One of the biggest advantages, in this Automator’s eyes, of using Amazon’s S3 service for file storage is its ability to interface directly with the Lambda service. If you’re not familiar with AWS Lambda, it’s essentially code execution in the cloud. There are no servers to manage, not even a terminal window! Lambda lets you run code without concerning yourself with anything else.

One of the coolest features of Lambda, though, is its ability to integrate natively with other AWS services. In Lambda, your code is organized into functions. Each function contains not only the code to execute but also the trigger that kicks off that code, along with other execution options. That concept is powerful. Think about it: you can run Python, Node.js, and other code without having to worry about the infrastructure. But if you had to kick off that code by logging into the AWS Management Console every time, it wouldn’t be very useful.

Lambda lets you define triggers drawn from hundreds of different events provided by dozens of event sources. One or more Lambda functions can subscribe to these events so that each occurrence kicks off the function. One of the most common event sources for Lambda triggers is the S3 service, and it’s the one we’re going to use here. S3 fires events all the time: when new files are uploaded to buckets, when files are copied or deleted, and so on. All of this activity generates events of various types in near real time.
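Every S3 notification reaches Lambda as an event whose records each carry an eventName such as ObjectCreated:Put or ObjectRemoved:Delete. The minimal sketch below shows how one function could tell those event types apart; classify_s3_record is a hypothetical helper written for illustration (not part of any AWS library), and the sample records are hand-written and trimmed to the fields used.

```python
from typing import Any, Dict


def classify_s3_record(record: Dict[str, Any]) -> str:
    """Return 'created', 'removed', or 'other' for one S3 event record.

    Hypothetical helper for illustration only. S3 event records name the
    event without the 's3:' prefix, e.g. 'ObjectCreated:Put'.
    """
    event_name = record.get("eventName", "")
    category = event_name.split(":", 1)[0]  # 'ObjectCreated:Put' -> 'ObjectCreated'
    if category == "ObjectCreated":
        return "created"
    if category == "ObjectRemoved":
        return "removed"
    return "other"  # e.g. restore, replication, or lifecycle events


# Trimmed, hand-written records; real notifications carry many more fields.
sample_records = [
    {"eventName": "ObjectCreated:Put", "s3": {"object": {"key": "report.csv"}}},
    {"eventName": "ObjectCreated:Copy", "s3": {"object": {"key": "copy.csv"}}},
    {"eventName": "ObjectRemoved:Delete", "s3": {"object": {"key": "old.csv"}}},
]

for rec in sample_records:
    print(rec["s3"]["object"]["key"], classify_s3_record(rec))
```

Grouping by the part before the colon keeps the check independent of the specific upload method (Put, Post, Copy, or multipart completion).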


Setting up the Lambda S3 Role

When it runs, Lambda needs permission to access your S3 bucket and, optionally, CloudWatch Logs if you intend to log Lambda activity. Before you start building your Lambda function, you must first create an IAM role that Lambda will use to work with S3 and to write logs to CloudWatch. Attach the appropriate S3 and CloudWatch policies to this role. An example of such a policy is below. It gives the role full access to my CloudWatch logs and full authority over S3.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "logs:*"
      ],
      "Resource": "arn:aws:logs:*:*:*"
    },
    {
      "Effect": "Allow",
      "Action": [
        "s3:GetObject",
        "s3:PutObject"
      ],
      "Resource": "arn:aws:s3:::*"
    },
    {
      "Effect": "Allow",
      "Action": "s3:*",
      "Resource": "*"
    }
  ]
}
```
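To see why this policy covers the S3 calls a function might make, here is a deliberately simplified evaluator. It only asks whether some Allow statement’s Action and Resource patterns match, approximating IAM’s * and ? wildcards with Python’s fnmatch. Real IAM evaluation also weighs explicit denies, conditions, NotAction/NotResource, and any other attached policies, so treat is_allowed (a hypothetical helper) as a way to reason about wildcards, not as an authorization check.

```python
from fnmatch import fnmatchcase
from typing import Any, Dict, List, Union

# The role's permissions policy from this section, expressed as a Python dict.
POLICY: Dict[str, Any] = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["logs:*"], "Resource": "arn:aws:logs:*:*:*"},
        {"Effect": "Allow", "Action": ["s3:GetObject", "s3:PutObject"], "Resource": "arn:aws:s3:::*"},
        {"Effect": "Allow", "Action": "s3:*", "Resource": "*"},
    ],
}


def _as_list(value: Union[str, List[str]]) -> List[str]:
    """IAM accepts either a single string or a list for Action/Resource."""
    return [value] if isinstance(value, str) else list(value)


def is_allowed(policy: Dict[str, Any], action: str, resource: str) -> bool:
    """Simplified check: does any Allow statement match both action and resource?

    Hypothetical helper. Ignores Deny, NotAction, NotResource, Condition and
    every other input real IAM evaluation uses; fnmatch only approximates
    IAM's '*' and '?' wildcards.
    """
    for statement in policy["Statement"]:
        if statement.get("Effect") != "Allow":
            continue
        # Action names are case-insensitive in IAM, so compare lowercased.
        action_ok = any(
            fnmatchcase(action.lower(), pattern.lower())
            for pattern in _as_list(statement.get("Action", []))
        )
        resource_ok = any(
            fnmatchcase(resource, pattern)
            for pattern in _as_list(statement.get("Resource", []))
        )
        if action_ok and resource_ok:
            return True
    return False


print(is_allowed(POLICY, "s3:GetObject", "arn:aws:s3:::adbtestbucket/rough_cut.png"))  # True
print(is_allowed(POLICY, "dynamodb:PutItem", "arn:aws:dynamodb:us-east-1:123456789012:table/demo"))  # False
```

Because the third statement allows s3:* on every resource, it already covers GetObject and PutObject. For least privilege you would drop it and scope the second statement to your own bucket, for example arn:aws:s3:::adbtestbucket/*.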

You’ll need to create this role and give it a name (such as LambdaS3 or whatever you’d like).

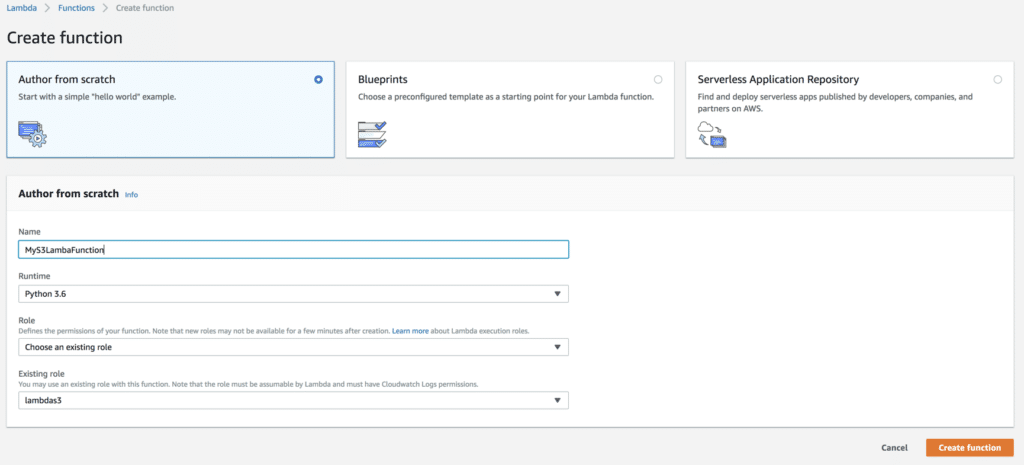
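Besides the permissions policy, a role that Lambda can use needs a trust relationship allowing the Lambda service to assume it. The console fills this in when you choose Lambda as the role’s use case; the sketch below builds that standard trust policy in Python so you can see its shape. The create_lambda_role function and its inline policy name are hypothetical, it is not invoked here, and it assumes boto3 is installed and AWS credentials are configured.

```python
import json
from typing import Any, Dict

# Trust (assume-role) policy that lets the Lambda service assume the role.
TRUST_POLICY: Dict[str, Any] = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "lambda.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def create_lambda_role(role_name: str, permissions_policy: Dict[str, Any]) -> str:
    """Create the role, attach an inline permissions policy, return the role ARN.

    Hypothetical helper; not called here. Needs boto3 and AWS credentials.
    """
    import boto3  # imported lazily so this module loads without boto3

    iam = boto3.client("iam")
    role = iam.create_role(
        RoleName=role_name,
        AssumeRolePolicyDocument=json.dumps(TRUST_POLICY),
    )
    iam.put_role_policy(
        RoleName=role_name,
        PolicyName=f"{role_name}-inline",  # hypothetical naming choice
        PolicyDocument=json.dumps(permissions_policy),
    )
    return role["Role"]["Arn"]


print(json.dumps(TRUST_POLICY, indent=2))
```

The trust policy answers "who may use this role", while the permissions policy answers "what the role may do"; Lambda needs both.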

Creating the Lambda Function – Adding Code

Once you have the role set up, you’ll need to create the function. To do that, browse to Lambda and click Create Function. You’ll be presented with the screen below, where you can see I’m using the role I just created.

For this example, I’ll be using Python 3.6 as the runtime, but you can use whatever language you’d like to run when an S3 event fires. (Lambda has since retired the Python 3.6 runtime, so pick a currently supported Python version if you follow along today.) Our example code isn’t going to do much; it only proves that it ran when an S3 event happened. In the example below, I’m reading the S3 key that initiated the event from the event dictionary Lambda passes to the handler and printing it out. This output will show up in CloudWatch Logs.

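```python
# The function's Handler setting must point at this name, e.g. lambda_function.handler.
def handler(event, context):
    # Lambda passes the S3 notification in as the `event` dictionary.
    # Grab the key (file name) of the object that triggered this invocation.
    sourceKey = event['Records'][0]['s3']['object']['key']

    # Anything printed here ends up in the function's CloudWatch Logs.
    print(sourceKey)
```

Creating the Lambda Function – Adding the Trigger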

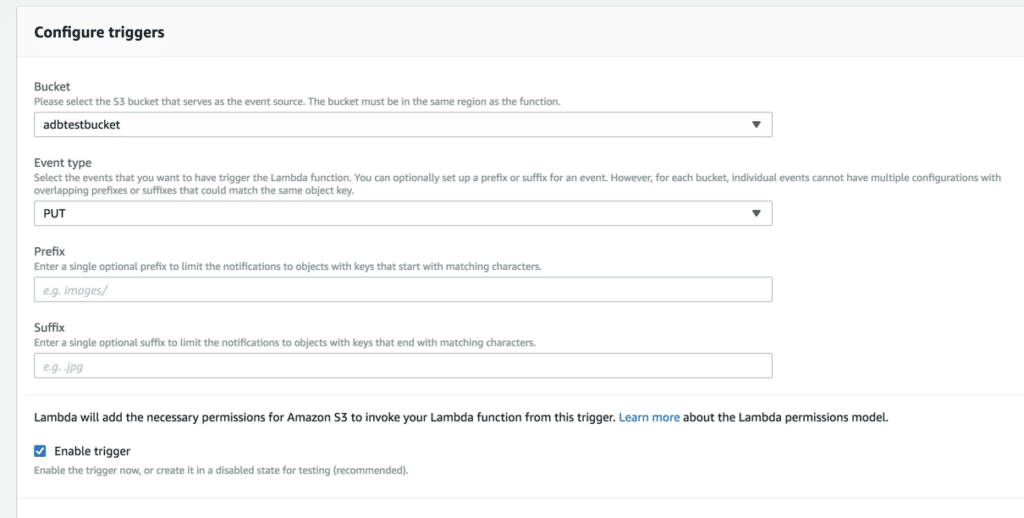

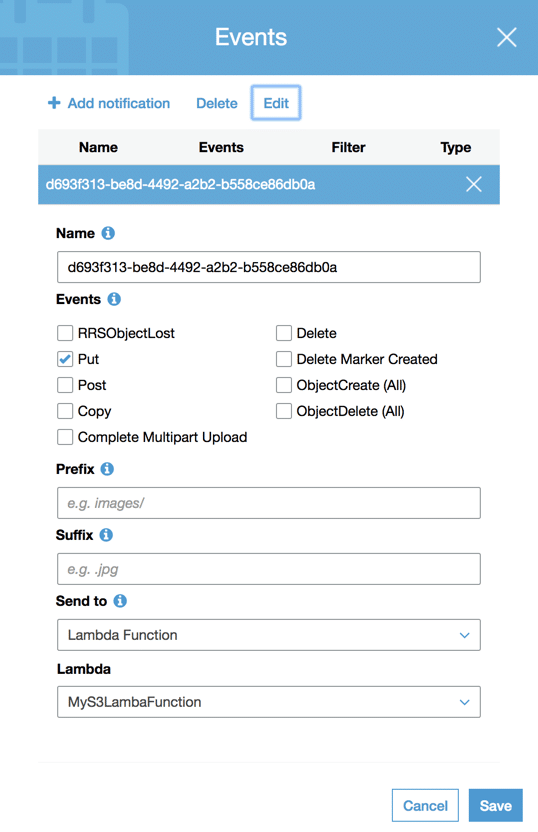

Once I have the code that will run inside the function, I’ll create the S3 trigger by selecting it on the left-hand side. Since I want to trigger off of new uploads, I’ll configure the trigger to fire on the PUT event.

You can optionally specify a prefix or suffix to narrow down the filter criteria, but for this example, let’s execute this Lambda function on every file that’s uploaded to the adbtestbucket bucket.
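The prefix and suffix options are plain string matches on the object key: an upload fires the function only if its key starts with the prefix and ends with the suffix, and an empty value matches anything. The sketch below mimics that rule locally with a hypothetical matches_filter helper. It is an approximation for reasoning about which uploads will fire, not code that S3 runs, and the image/ prefix and .png suffix are made-up values.

```python
def matches_filter(key: str, prefix: str = "", suffix: str = "") -> bool:
    """Approximate an S3 notification prefix/suffix filter for one object key."""
    return key.startswith(prefix) and key.endswith(suffix)


uploads = ["rough_cut.png", "Design_file-01.jpg", "images/logo.png", "notes.txt"]

# No filter, as in this walkthrough: every upload triggers the function.
print([k for k in uploads if matches_filter(k)])
# A hypothetical filter: only PNG files under images/.
print([k for k in uploads if matches_filter(k, prefix="images/", suffix=".png")])
```

With no prefix or suffix every key matches, which is why every upload to the bucket runs the function in this example.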

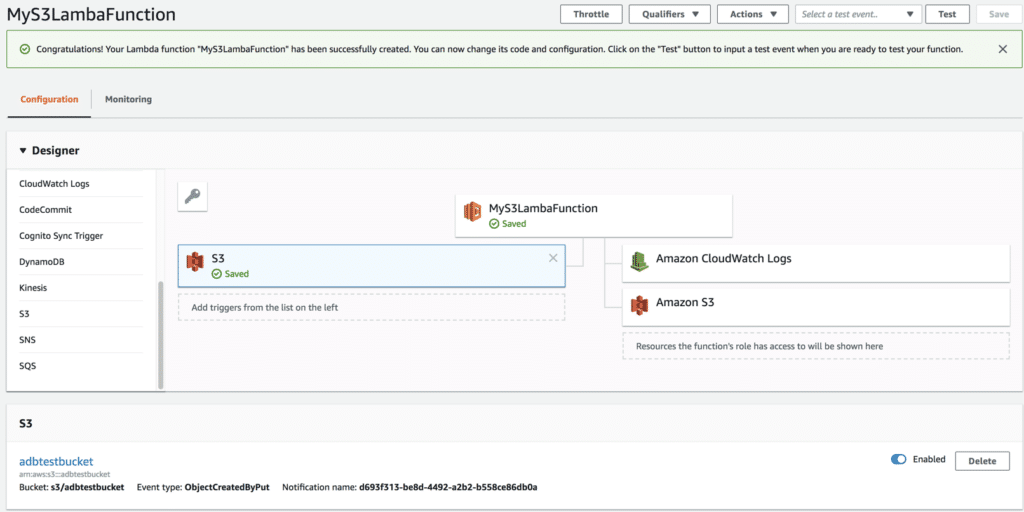

Once created, your Lambda function will look similar to below.

What isn’t obvious is that creating the S3 trigger actually registers an event notification on your S3 bucket. If I go to my S3 bucket’s properties, I can see a registered event with all of the options I configured in the Lambda window.
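That registered event is the bucket’s notification configuration. As a sketch of what the console creates, the dictionary below follows the shape boto3’s put_bucket_notification_configuration expects; the function ARN, region, and account ID are placeholders, and the helper names are hypothetical. If you configure this outside the console, the function also needs a resource-based permission (for example, added with Lambda’s add_permission) allowing s3.amazonaws.com to invoke it; the console adds that permission for you when you create the trigger.

```python
import json
from typing import Any, Dict, List

# Placeholder ARN: replace region, account ID, and function name with your own.
FUNCTION_ARN = "arn:aws:lambda:us-east-1:123456789012:function:my-s3-function"


def build_notification_config(function_arn: str, prefix: str = "", suffix: str = "") -> Dict[str, Any]:
    """Build a configuration that invokes a Lambda function on PUT uploads."""
    lambda_config: Dict[str, Any] = {
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:Put"],
    }
    rules: List[Dict[str, str]] = []
    if prefix:
        rules.append({"Name": "prefix", "Value": prefix})
    if suffix:
        rules.append({"Name": "suffix", "Value": suffix})
    if rules:
        lambda_config["Filter"] = {"Key": {"FilterRules": rules}}
    return {"LambdaFunctionConfigurations": [lambda_config]}


def apply_notification_config(bucket: str, config: Dict[str, Any]) -> None:
    """Register the configuration on a bucket (not called here; needs boto3 and credentials).

    Note: this call replaces the bucket's entire existing notification configuration.
    """
    import boto3

    s3 = boto3.client("s3")
    s3.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=config,
    )


print(json.dumps(build_notification_config(FUNCTION_ARN), indent=2))
```

Because the put call overwrites whatever is already registered, read the existing configuration first if the bucket has other notifications you want to keep.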

Checking CloudWatch

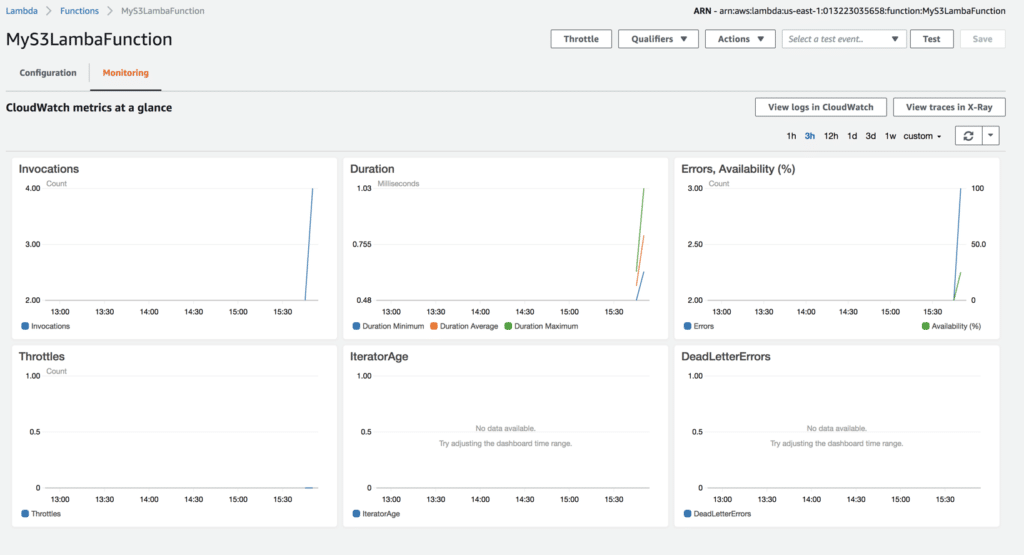

By default, Lambda writes function activity to CloudWatch Logs, as long as the function’s role allows it. That’s why the role created earlier needed access to CloudWatch. When a new file is uploaded to the S3 bucket with the registered event, the Lambda function should run automatically. To confirm this, head over to CloudWatch or click the Monitoring tab inside the function itself. This initial view shows a lot of useful information about the function’s executions, such as invocation counts, durations, and errors.

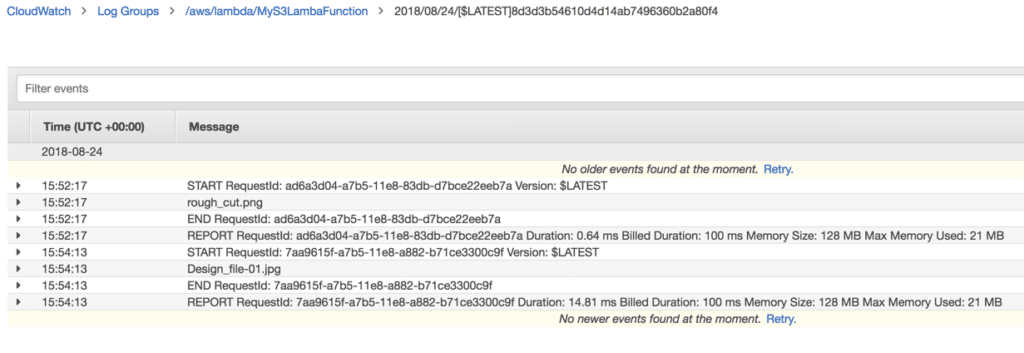

However, it doesn’t show granular details like the output of the print statement in our Python code. To see that, click View CloudWatch Logs, where you’ll see various log streams. Click the log stream that matches the appropriate date, and you can see when the function started, any console output your code produced, and when it stopped.
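Each log stream mixes lines the Lambda platform writes (START, END, and REPORT for every invocation) with whatever your code prints. The sketch below separates the two so only the function’s own output remains. It assumes Lambda’s default /aws/lambda/function-name log group naming; the helper names are hypothetical, fetch_messages is not invoked and needs boto3 plus credentials, and the sample messages are hand-written with made-up IDs and timings.

```python
from typing import Dict, List

# Lines the Lambda platform writes around each invocation.
PLATFORM_PREFIXES = ("START RequestId:", "END RequestId:", "REPORT RequestId:", "INIT_START")


def log_group_for(function_name: str) -> str:
    """Default CloudWatch Logs group Lambda uses for a function."""
    return f"/aws/lambda/{function_name}"


def split_messages(messages: List[str]) -> Dict[str, List[str]]:
    """Separate platform lines from the function's own output (e.g. print calls)."""
    result: Dict[str, List[str]] = {"platform": [], "output": []}
    for message in messages:
        text = message.rstrip("\n")
        kind = "platform" if text.startswith(PLATFORM_PREFIXES) else "output"
        result[kind].append(text)
    return result


def fetch_messages(function_name: str, start_time_ms: int) -> List[str]:
    """Fetch one page of log messages (not called here; needs boto3 and credentials)."""
    import boto3

    logs = boto3.client("logs")
    response = logs.filter_log_events(
        logGroupName=log_group_for(function_name),
        startTime=start_time_ms,
    )
    # Only the first page; follow response.get("nextToken") to read more.
    return [event["message"] for event in response["events"]]


# Hand-written messages in the shape Lambda writes; IDs and timings are made up.
sample = [
    "START RequestId: example-id Version: $LATEST\n",
    "rough_cut.png\n",
    "END RequestId: example-id\n",
    "REPORT RequestId: example-id\tDuration: 1.50 ms\tBilled Duration: 2 ms\t"
    "Memory Size: 128 MB\tMax Memory Used: 40 MB\t\n",
]
print(split_messages(sample)["output"])  # ['rough_cut.png']
```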

In my example, you can see rough_cut.png and Design_file-01.jpg. These are the files I uploaded directly to the adbtestbucket bucket to demonstrate the function being triggered. If I see them, I know it worked!
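A file name with spaces would look different in the logs: S3 URL-encodes object keys in the event, so a name like rough cut.png arrives as rough+cut.png. The sketch below is a slightly more defensive variant of the earlier handler, not the author’s code: it decodes each key with urllib.parse.unquote_plus and loops over the event’s Records list rather than reading only the first entry. The sample event is hand-written and trimmed, so you can run the handler locally before uploading anything.

```python
import urllib.parse
from typing import Any, Dict, List


def handler(event: Dict[str, Any], context: Any) -> List[str]:
    """Print and return every object key in an S3 notification event."""
    keys: List[str] = []
    # 'Records' is a list, so loop over it instead of reading only index 0.
    for record in event.get("Records", []):
        raw_key = record["s3"]["object"]["key"]
        # Keys arrive URL-encoded: 'rough cut.png' shows up as 'rough+cut.png'.
        key = urllib.parse.unquote_plus(raw_key, encoding="utf-8")
        print(key)
        keys.append(key)
    return keys


# Trimmed, hand-written event for a local run; not a captured notification.
sample_event = {
    "Records": [
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "adbtestbucket"}, "object": {"key": "rough+cut.png"}}},
        {"eventName": "ObjectCreated:Put",
         "s3": {"bucket": {"name": "adbtestbucket"}, "object": {"key": "Design_file-01.jpg"}}},
    ]
}

handler(sample_event, None)  # prints 'rough cut.png', then 'Design_file-01.jpg'
```

Decoding matters as soon as the key is passed back to S3 (for example, to read or copy the object), since the encoded form names a different key.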

Summary

This setup consists of a role with appropriate rights to both the S3 service and CloudWatch, the code to execute, and finally the event trigger. Combine all of these pieces, and you have a ton of potential to perform even the most complicated automation tasks in AWS.

If you need to automate various processes in S3, Lambda is an excellent service for the job. You have the flexibility to trigger code written in several different languages (Python, Node.js, .NET, etc.) on a schedule or in response to the events you choose.

I used this exact approach to automatically rename files as they were uploaded to S3. This prevented human error and meant users didn’t have to worry about what to name the files they uploaded. It saved a ton of time!
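The renaming scheme isn’t described here, so the sketch below invents one purely for illustration: lowercase the file name and turn runs of whitespace into hyphens. Two details apply to any scheme. S3 has no rename operation, so a “rename” is a copy to the new key followed by a delete of the old one. And because the renamed object lands in the same bucket, the function should skip keys that already follow the rule; otherwise a trigger that also covers copy events (for example, all object-create events) could keep invoking the function on its own output. All helper names are hypothetical, and rename_object is not invoked here (it needs boto3 and credentials, and expects an already URL-decoded key).

```python
import re
from typing import Optional


def normalized_key(key: str) -> str:
    """Hypothetical naming rule: lowercase the file name, whitespace runs become hyphens."""
    directory, _, name = key.rpartition("/")
    new_name = re.sub(r"\s+", "-", name.strip()).lower()
    return f"{directory}/{new_name}" if directory else new_name


def rename_target(key: str) -> Optional[str]:
    """Return the new key, or None when the key already follows the rule (avoids loops)."""
    target = normalized_key(key)
    return None if target == key else target


def rename_object(bucket: str, key: str) -> Optional[str]:
    """'Rename' by copy then delete; returns the new key or None if nothing changed."""
    target = rename_target(key)
    if target is None:
        return None
    import boto3  # only needed when a rename actually happens

    s3 = boto3.client("s3")
    s3.copy_object(Bucket=bucket, Key=target, CopySource={"Bucket": bucket, "Key": key})
    s3.delete_object(Bucket=bucket, Key=key)
    return target


print(rename_target("Rough Cut.png"))               # rough-cut.png
print(rename_target("designs/Design File 01.JPG"))  # designs/design-file-01.jpg
print(rename_target("notes.txt"))                   # None
```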


Jessica is Senior Global Campaigns Manager at N2WS with more than 10 years of experience. Jessica enjoys very spicy foods, lifting heavy things and cold snowy mountains (even though she lives near the arid desert).