Why Use Cold Storage with AWS Backup?

AWS Backup is a managed backup service that centralizes data backup across AWS services, including Amazon EBS, Amazon RDS, DynamoDB, Amazon S3, and more. It offers a single interface to manage backup scheduling, retention, and policies.

Cold storage is essential for organizations that need to retain backups for long periods without incurring high storage costs. Many compliance frameworks require keeping data for years, even if it is rarely accessed. Storing such data in standard storage classes can quickly become expensive.

Cold storage tiers like Amazon S3 Glacier and Glacier Deep Archive allow backups to be retained at a fraction of the cost, making long-term retention financially viable. AWS Backup supports lifecycle policies to automatically move backups from warm to cold storage, but configuring and optimizing these transitions is not trivial.

Administrators must balance cost savings against retrieval times and ensure that retention policies align with regulatory requirements. Additionally, restoring data from cold storage can involve complex orchestration and delays, requiring careful planning for disaster recovery scenarios.

In this article:

- Cold Storage Options in AWS

- Backup Cost for Cold Storage vs. Warm Storage Pricing in Amazon S3

- Tutorial: Archive Amazon EC2 Backup to Amazon S3 Glacier

- Best Practices for AWS Backup Cold Storage

Cold Storage Options in AWS

Amazon S3 Glacier Storage

Amazon S3 Glacier and S3 Glacier Deep Archive are cold storage classes for long-term data retention at minimal cost. They are suitable for backups, archives, and compliance data that is rarely accessed but must be stored for extended periods.

S3 Glacier Flexible Retrieval supports three retrieval options: expedited (1–5 minutes), standard (3–5 hours), and bulk (5–12 hours), so you can trade speed against cost. S3 Glacier Deep Archive is even cheaper and is intended for data accessed once or twice a year: standard retrievals complete within 12 hours and bulk retrievals within 48 hours.
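To make the trade-off concrete, here is a minimal Python sketch that picks the cheapest S3 Glacier Flexible Retrieval tier whose typical worst-case time fits a restore deadline, then builds the `RestoreRequest` argument accepted by boto3's `restore_object`. The timings come from the ranges above. The per-GB prices are the US East (N. Virginia) figures quoted in the pricing section, and treating bulk retrieval as free is an assumption to verify against current AWS pricing. `choose_retrieval_tier` and `build_restore_request` are hypothetical helper names.

```python
from typing import Optional

# Typical worst-case retrieval time (hours) and per-GB retrieval price for
# S3 Glacier Flexible Retrieval. Prices are assumed us-east-1 list prices;
# per-request fees (e.g. for expedited retrievals) are ignored here.
FLEXIBLE_RETRIEVAL_TIERS = {
    "Expedited": {"max_hours": 5 / 60, "usd_per_gb": 0.03},
    "Standard": {"max_hours": 5, "usd_per_gb": 0.01},
    "Bulk": {"max_hours": 12, "usd_per_gb": 0.0},  # assumption: bulk is free
}


def choose_retrieval_tier(deadline_hours: float) -> Optional[str]:
    """Return the cheapest tier whose worst-case time meets the deadline."""
    candidates = [
        (spec["usd_per_gb"], name)
        for name, spec in FLEXIBLE_RETRIEVAL_TIERS.items()
        if spec["max_hours"] <= deadline_hours
    ]
    if not candidates:
        return None  # no tier is expected to finish in time
    return min(candidates)[1]  # lowest price wins


def build_restore_request(tier: str, days_available: int = 7) -> dict:
    """Build the RestoreRequest argument for the S3 restore_object call."""
    return {"Days": days_available, "GlacierJobParameters": {"Tier": tier}}
```

For example, `boto3.client("s3").restore_object(Bucket="my-backup-archive", Key="backups/db.tar.gz", RestoreRequest=build_restore_request(tier))` would request a temporary restored copy kept for seven days; the bucket and key are placeholders. Deep Archive does not offer the Expedited tier, so a Deep Archive variant would consider only Standard and Bulk.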

Lifecycle policies can automatically transition objects from S3 Standard to Glacier tiers based on object age. Both classes are integrated with AWS Backup, allowing organizations to manage cold storage as part of a broader data protection strategy.
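As an illustration, the sketch below builds an S3 lifecycle configuration that moves objects under a prefix to Glacier Flexible Retrieval (`GLACIER`) after 30 days and to `DEEP_ARCHIVE` after 180 days, in the shape boto3's `put_bucket_lifecycle_configuration` expects. The prefix, rule ID format, and day counts are illustrative assumptions, and `glacier_lifecycle_configuration` is a hypothetical helper.

```python
from typing import Optional


def glacier_lifecycle_configuration(
    prefix: str,
    to_glacier_days: int = 30,
    to_deep_archive_days: int = 180,
    expire_after_days: Optional[int] = None,
) -> dict:
    """Build a LifecycleConfiguration dict with age-based Glacier transitions."""
    if to_deep_archive_days <= to_glacier_days:
        raise ValueError("DEEP_ARCHIVE transition must come after GLACIER")
    rule = {
        "ID": f"archive-{prefix.strip('/') or 'all'}",
        "Filter": {"Prefix": prefix},
        "Status": "Enabled",
        # Leaving GLACIER before 90 days (or DEEP_ARCHIVE before 180 days)
        # still incurs the minimum-duration charge, so keep the gaps wide.
        "Transitions": [
            {"Days": to_glacier_days, "StorageClass": "GLACIER"},
            {"Days": to_deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
        ],
    }
    if expire_after_days is not None:
        if expire_after_days <= to_deep_archive_days:
            raise ValueError("expiration must come after the last transition")
        rule["Expiration"] = {"Days": expire_after_days}
    return {"Rules": [rule]}
```

Applying it would look like `boto3.client("s3").put_bucket_lifecycle_configuration(Bucket="my-backup-archive", LifecycleConfiguration=glacier_lifecycle_configuration("backups/", expire_after_days=2555))`, where the bucket name and the roughly seven-year expiration are placeholders.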

Amazon EBS Cold HDD Volumes

While AWS Backup cold storage typically refers to S3 Glacier tiers, it’s worth noting that Amazon also offers EBS Cold HDD (sc1) volumes as a low-cost storage option.

These are designed for large, sequential workloads—think log data, infrequently accessed datasets, or backups that still need to sit on EBS for quick reattachment. At around $0.015/GB-month, sc1 is cheaper than other EBS options but still more expensive than Glacier or Deep Archive.

⚠️ Important distinction: sc1 volumes are not archival storage. They remain live block storage attached to EC2 and don’t provide the same long-term cost savings or durability guarantees as S3-based cold storage. If your goal is compliance retention or cutting backup costs by 85–98%, Glacier tiers are the right tool.

In short:

- Use sc1 volumes when you need cheap, slow EBS storage for ongoing workloads.

- Use S3 Glacier tiers when you need true cold storage for AWS Backup archives.


Backup Cost for Cold Storage vs. Warm Storage Pricing in Amazon S3

S3 Warm Storage Pricing

S3 warm storage classes like S3 Standard and S3 Standard-Infrequent Access (S3 Standard-IA) are designed for frequently or occasionally accessed data. In the US East (N. Virginia) region, S3 Standard costs $0.023 per GB for the first 50 TB per month, with prices decreasing slightly for higher usage tiers. S3 Standard-IA is optimized for infrequently accessed data requiring millisecond retrieval, priced at $0.0125 per GB per month. However, it has a minimum storage duration of 30 days and charges for retrievals at $0.01 per GB.

Requests and lifecycle transitions also add to the cost. In S3 Standard, PUT, COPY, and POST requests cost $0.005 per 1,000 requests, while GET and SELECT requests cost $0.0004 per 1,000 requests; S3 Standard-IA charges higher per-request rates. Lifecycle transitions into S3 Standard-IA incur an additional $0.01 per 1,000 requests.

S3 Cold Storage Pricing

Cold storage options like S3 Glacier Flexible Retrieval (formerly Glacier) and S3 Glacier Deep Archive offer significantly lower storage costs for long-term backups. Glacier Flexible Retrieval costs $0.0036 per GB per month, while Deep Archive reduces this further to $0.00099 per GB. These classes are ideal for data that can tolerate retrieval times ranging from minutes to hours.

Retrievals from Glacier classes incur additional charges. For Glacier Flexible Retrieval, standard retrievals cost $0.01 per GB and expedited retrievals cost $0.03 per GB, with a $10.00 per 1,000 requests fee for expedited operations. Deep Archive retrievals are priced at $0.02 per GB for standard retrieval and $0.0025 per GB for bulk retrievals. Both storage classes enforce minimum storage durations: 90 days for Glacier Flexible Retrieval and 180 days for Deep Archive.
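The minimum-duration rule is easy to overlook: deleting or moving an object early is billed as though it had stayed for the full minimum. The sketch below estimates that prorated charge alongside a one-off retrieval cost, using the per-GB prices quoted above (US East, subject to change). It assumes a 30-day month for proration and ignores request fees; `early_deletion_charge` and `retrieval_cost` are hypothetical helper names.

```python
# us-east-1 prices quoted in this article (assumption: they may change).
STORAGE_USD_PER_GB_MONTH = {"GLACIER": 0.0036, "DEEP_ARCHIVE": 0.00099}
MIN_STORAGE_DAYS = {"GLACIER": 90, "DEEP_ARCHIVE": 180}
# Only the retrieval tiers priced in this article are listed.
RETRIEVAL_USD_PER_GB = {
    ("GLACIER", "Expedited"): 0.03,
    ("GLACIER", "Standard"): 0.01,
    ("DEEP_ARCHIVE", "Standard"): 0.02,
    ("DEEP_ARCHIVE", "Bulk"): 0.0025,
}


def early_deletion_charge(storage_class: str, size_gb: float, days_stored: int) -> float:
    """Storage charge for the days left in the minimum duration (30-day month assumed)."""
    remaining_days = max(0, MIN_STORAGE_DAYS[storage_class] - days_stored)
    return size_gb * STORAGE_USD_PER_GB_MONTH[storage_class] * remaining_days / 30


def retrieval_cost(storage_class: str, tier: str, size_gb: float) -> float:
    """Per-GB retrieval charge only; per-request fees are not included."""
    return size_gb * RETRIEVAL_USD_PER_GB[(storage_class, tier)]
```

For instance, deleting 1,000 GB from Deep Archive after 60 days still leaves 120 days of storage to pay for, about $3.96, while a bulk retrieval of the same data costs about $2.50 in per-GB fees.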

How Much You Can Save with Cold Storage

Shifting infrequently accessed backups from warm to cold storage can cut storage costs by roughly 84% (Glacier Flexible Retrieval) to 96% (Deep Archive) compared with S3 Standard. For example, storing 1 TB of data in S3 Standard costs approximately $23/month, while Glacier Flexible Retrieval reduces this to about $3.60/month and Deep Archive lowers it further to about $1/month. These savings scale with data size and retention duration, making cold storage tiers particularly cost-effective for archival use cases where retrieval latency is acceptable.

That’s the difference between paying $276 a year to keep 1 TB in S3 Standard vs. just $12 a year in Deep Archive. In other words—you could fund your team’s pizza Fridays instead of your backup bill.
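The same arithmetic can be run for any data size. This sketch computes monthly and yearly storage cost per class and the percentage saved relative to S3 Standard, using the per-GB prices quoted in this article and treating 1 TB as 1,000 GB to match the round numbers above. Request, retrieval, and minimum-duration charges are deliberately left out, and sc1 is included only for comparison since it is block storage, not an archive tier.

```python
# Storage prices (USD per GB-month, us-east-1) as quoted in this article.
PRICES = {
    "S3 Standard": 0.023,
    "EBS sc1": 0.015,
    "S3 Standard-IA": 0.0125,
    "S3 Glacier Flexible Retrieval": 0.0036,
    "S3 Glacier Deep Archive": 0.00099,
}


def storage_costs(size_gb: float, baseline: str = "S3 Standard") -> dict:
    """Return {class: (monthly_usd, yearly_usd, percent_saved_vs_baseline)}."""
    base = PRICES[baseline]
    return {
        name: (
            round(size_gb * price, 2),
            round(size_gb * price * 12, 2),
            round(100 * (1 - price / base), 1),
        )
        for name, price in PRICES.items()
    }


for name, (monthly, yearly, saved) in storage_costs(1000).items():
    print(f"{name:30} ${monthly:>7.2f}/mo  ${yearly:>8.2f}/yr  {saved:5.1f}% saved")
```

For 1,000 GB, Deep Archive comes out at $0.99/month ($11.88/year), which the text rounds to $1 and $12, and Glacier Flexible Retrieval saves about 84.3% relative to S3 Standard.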

Some practical tips for getting more out of cold storage:

- Pre-stage metadata for faster restores: When using Glacier or Deep Archive, maintain a lightweight index of backup metadata (e.g., file lists, timestamps, tags) in a warm store such as DynamoDB or S3 Standard, so you can identify exactly which archive to retrieve without restoring anything first.

- Build a parallel retrieval pipeline for urgent restores: Use Glacier’s expedited retrieval option for a “critical subset” of data to bring services online faster while bulk restores of the remaining data continue in the background.

- Deduplicate before archival: Remove duplicate data before pushing backups into cold storage. This reduces redundant data in long-term archives, lowering both storage costs and retrieval times during restores.

- Implement cross-region replication for compliance: For highly regulated workloads, replicate cold storage backups into another AWS region or even a different cloud provider. This mitigates risks from a region-wide AWS disruption or Glacier service degradation.

- Tie archival to actual usage patterns, not just age: Instead of using static lifecycle policies, use Lambda or Step Functions to dynamically decide when to move data to Glacier tiers based on business events (e.g., project closure, customer offboarding).
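As a rough illustration of event-driven archival, the sketch below is a Lambda-style handler that, on a hypothetical `project_closed` or `customer_offboarded` event naming an S3 bucket and prefix, rewrites every object under that prefix into `DEEP_ARCHIVE`. The event shape and event names are assumptions. The S3 calls (`list_objects_v2` and the managed `copy` method with `StorageClass` in `ExtraArgs`) are standard boto3 client methods, and the client is injectable so the logic can be exercised without AWS.

```python
from typing import Any, Optional

ARCHIVE_EVENTS = {"project_closed", "customer_offboarded"}  # hypothetical event types


def archive_prefix(s3: Any, bucket: str, prefix: str,
                   storage_class: str = "DEEP_ARCHIVE") -> int:
    """Copy each object under prefix onto itself with a colder storage class."""
    # GLACIER/DEEP_ARCHIVE objects are already archived and could not be
    # copied without a restore first, so they are skipped.
    skip = {"GLACIER", "DEEP_ARCHIVE", storage_class}
    moved = 0
    kwargs = {"Bucket": bucket, "Prefix": prefix}
    while True:
        page = s3.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            if obj.get("StorageClass") in skip:
                continue
            s3.copy({"Bucket": bucket, "Key": obj["Key"]}, bucket, obj["Key"],
                    ExtraArgs={"StorageClass": storage_class})
            moved += 1
        if not page.get("IsTruncated"):
            return moved
        kwargs["ContinuationToken"] = page["NextContinuationToken"]


def handler(event: dict, context: Any = None, s3: Optional[Any] = None) -> dict:
    """Lambda entry point; the event shape {"type", "bucket", "prefix"} is assumed."""
    if event.get("type") not in ARCHIVE_EVENTS:
        return {"archived": 0, "skipped": True}
    if s3 is None:
        import boto3  # imported lazily so the logic can be tested without AWS
        s3 = boto3.client("s3")
    return {"archived": archive_prefix(s3, event["bucket"], event["prefix"]),
            "skipped": False}
```

In a versioned bucket, the copy creates a new archived version and leaves the previous version in its original class, so a noncurrent-version lifecycle rule would be needed to realize the full savings.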

Tutorial: Archive Amazon EC2 Backup to Amazon S3 Glacier

This tutorial walks through how to automate the archival of Amazon EC2 backup recovery points (AMIs) managed by AWS Backup to Amazon S3 Glacier storage classes using an event-driven solution built with native AWS services. These instructions are adapted from an AWS blog post.

1. Architecture Overview

In this tutorial we specify that backups older than 30 days should be saved to Amazon S3 Glacier Instant Retrieval, which offers low-cost storage with millisecond retrieval times—suitable for scenarios requiring lower RTOs.

The solution uses AWS Backup, Amazon S3, EventBridge, Step Functions, Lambda, SQS, and Systems Manager Parameter Store. The workflow operates on a schedule and performs the following:

- Retrieves EC2 backup recovery points (AMIs) older than a specified number of days.

- Stores each AMI as a compressed binary object in an S3 bucket.

- Transitions the S3 object to a selected Glacier storage class using the Boto3 SDK.

- Deletes the corresponding recovery point from AWS Backup.

- Logs status information in an SQS queue and generates a consolidated log file.
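The first step, finding completed EC2 recovery points older than the threshold, might look roughly like this in Python. It calls AWS Backup's `list_recovery_points_by_backup_vault` with the `ByResourceType` and `ByCreatedBefore` filters, follows `NextToken` pagination, and extracts the AMI ID from each recovery point ARN. The vault name and function name are placeholders, and this is a sketch, not the code shipped with the AWS solution.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, List, Optional


def old_ec2_recovery_points(backup: Any, vault_name: str, older_than_days: int,
                            now: Optional[datetime] = None) -> List[dict]:
    """Return completed EC2 recovery points created before the cutoff."""
    now = now or datetime.now(timezone.utc)
    kwargs = {
        "BackupVaultName": vault_name,
        "ByResourceType": "EC2",
        "ByCreatedBefore": now - timedelta(days=older_than_days),
    }
    results = []
    while True:
        page = backup.list_recovery_points_by_backup_vault(**kwargs)
        for rp in page.get("RecoveryPoints", []):
            if rp.get("Status") != "COMPLETED":
                continue  # skip partial, expired, or in-progress points
            arn = rp["RecoveryPointArn"]
            # EC2 recovery point ARNs look like arn:aws:ec2:<region>::image/ami-...
            results.append({
                "arn": arn,
                "ami_id": arn.rsplit("/", 1)[-1],
                "resource_arn": rp.get("ResourceArn"),
                "created": rp.get("CreationDate"),
            })
        token = page.get("NextToken")
        if not token:
            return results
        kwargs["NextToken"] = token
```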

2. Workflow Steps

The solution follows these steps:

- Scheduled trigger: An EventBridge rule runs the Step Functions workflow based on a user-defined cron expression.

- Backup retrieval: A Lambda function lists recovery points from AWS Backup that are in the Completed state and older than the specified retention threshold.

- AMI storage: Using the CreateStoreImageTask API, the AMI is copied to an S3 bucket in standard storage as a .bin file. The path format includes metadata such as account ID, region, instance ID, and backup date.

- Storage class transition: A separate Lambda function uses Boto3 to copy the S3 object into a Glacier storage class (GLACIER, GLACIER_IR, or DEEP_ARCHIVE). Lifecycle policies are not used—transition is handled programmatically.

- Cleanup: After successful archival, the original recovery point is deleted from the backup vault.

- Logging: Execution results are posted to an SQS queue. Another Lambda function generates a summary report and saves it in the same S3 bucket under a logs/ directory.
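The AMI storage and storage-class steps could be sketched as follows. `create_store_image_task` and `describe_store_image_tasks` are EC2 APIs, and S3's managed `copy` method accepts `StorageClass` in `ExtraArgs` and switches to multipart copy for objects above the 5 GB single-request limit. The key layout built by `archive_key` is a hypothetical rendering of the path format described above. Deleting the Standard-class `.bin` after the copy is this sketch's own choice, to avoid paying for two copies. Polling is left to the caller, for example a Step Functions wait loop.

```python
from datetime import date
from typing import Any, Optional

GLACIER_CLASSES = {"GLACIER", "GLACIER_IR", "DEEP_ARCHIVE"}


def archive_key(account_id: str, region: str, instance_id: str,
                backup_date: date, ami_id: str) -> str:
    """Hypothetical key layout carrying the metadata described in the tutorial."""
    return f"{account_id}/{region}/{instance_id}/{backup_date.isoformat()}/{ami_id}.bin"


def start_store(ec2: Any, ami_id: str, bucket: str) -> str:
    """Start CreateStoreImageTask and return the object key it will write."""
    return ec2.create_store_image_task(ImageId=ami_id, Bucket=bucket)["ObjectKey"]


def store_state(ec2: Any, ami_id: str) -> Optional[str]:
    """Return InProgress, Completed, or Failed for the AMI's store task, if any."""
    results = ec2.describe_store_image_tasks(ImageIds=[ami_id])["StoreImageTaskResults"]
    return results[0]["StoreTaskState"] if results else None


def move_to_glacier(s3: Any, bucket: str, src_key: str, dest_key: str,
                    storage_class: str = "GLACIER_IR") -> None:
    """Copy the .bin into a Glacier class under its final key, then drop the original."""
    if storage_class not in GLACIER_CLASSES:
        raise ValueError(f"unsupported storage class: {storage_class}")
    s3.copy({"Bucket": bucket, "Key": src_key}, bucket, dest_key,
            ExtraArgs={"StorageClass": storage_class})
    s3.delete_object(Bucket=bucket, Key=src_key)
```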

3. Deploying the CloudFormation Template

Before deploying the solution, ensure you meet these prerequisites:

- Backup vault name: Identify the AWS Backup vault currently in use for your Amazon EC2 backups.

- Vault policy: Verify that the backup vault’s access policy allows the DeleteRecoveryPoint API action.

- Test data: Ensure there are existing EC2 recovery points in AWS Backup to validate the setup.

- Permissions: You need IAM permissions to create AWS CloudFormation stacks and associated resources.

These requirements ensure the deployment runs smoothly and that the workflow has access to all necessary resources.
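To check the vault-policy prerequisite before deploying, one option is to fetch the vault's access policy with AWS Backup's `get_backup_vault_access_policy` and look for `Deny` statements covering `backup:DeleteRecoveryPoint`. The sketch below does only that simple scan. It does not evaluate conditions, principals, or `NotAction`, so a clean result is a hint rather than proof. `denies_delete_recovery_point` is a hypothetical helper.

```python
import fnmatch
import json
from typing import List


def denies_delete_recovery_point(policy_json: str) -> List[str]:
    """Return Sids of Deny statements whose Action matches backup:DeleteRecoveryPoint."""
    statements = json.loads(policy_json).get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]  # a policy may contain a single statement object
    offending = []
    for i, stmt in enumerate(statements):
        if stmt.get("Effect") != "Deny":
            continue
        actions = stmt.get("Action", [])
        if isinstance(actions, str):
            actions = [actions]
        # IAM action names are case-insensitive and support * and ? wildcards.
        if any(fnmatch.fnmatchcase("backup:deleterecoverypoint", a.lower()) for a in actions):
            offending.append(stmt.get("Sid", f"statement-{i}"))
    return offending
```

The policy string would come from `boto3.client("backup").get_backup_vault_access_policy(BackupVaultName="my-vault")["Policy"]`, with the vault name as a placeholder; a vault with no access policy raises an error instead of returning one, and in that case no vault-level deny applies.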

Deploying the Solution

AWS provides a prebuilt CloudFormation template to automate the deployment. This template provisions all the required resources in your selected AWS region, including S3 buckets, Lambda functions, Step Functions, EventBridge rules, and IAM roles.

- Launch the template: Use the provided link to launch the CloudFormation stack in the AWS Management Console. If you’re not already signed in, log into your AWS account. Select the region where your EC2 recovery points are stored.

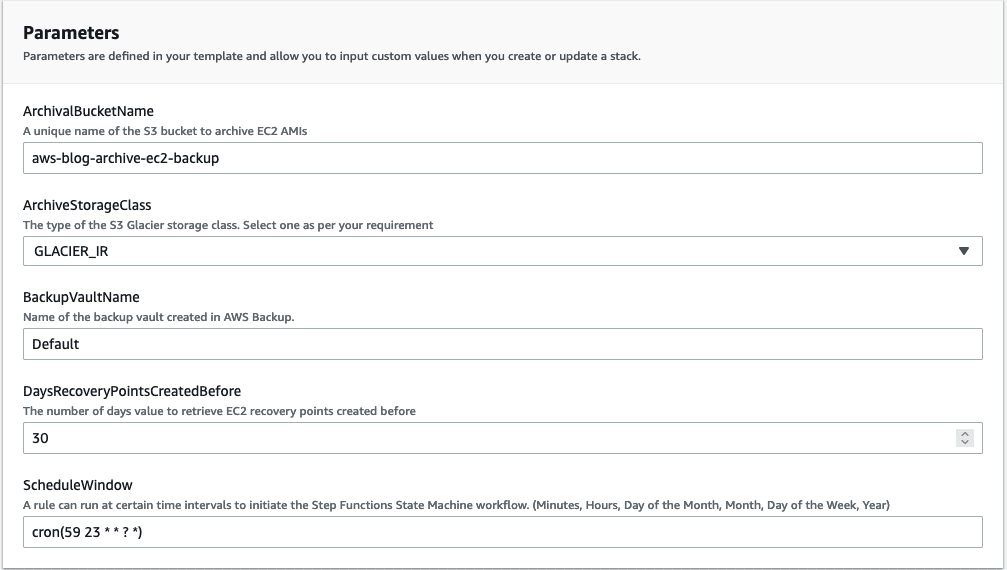

- Configure stack parameters: On the stack creation page, provide the following parameters:

  - ArchivalBucketName: A unique name for the S3 bucket to store archived recovery points.
  - ArchiveStorageClass: Choose the S3 Glacier storage class (GLACIER_IR, GLACIER, or DEEP_ARCHIVE).
  - BackupVaultName: Name of your existing AWS Backup vault.
  - DaysRecoveryPointsCreatedBefore: Specify how many days old recovery points must be to archive them.
  - ScheduleWindow: Define a cron expression to set the workflow’s execution schedule.

Source: Amazon

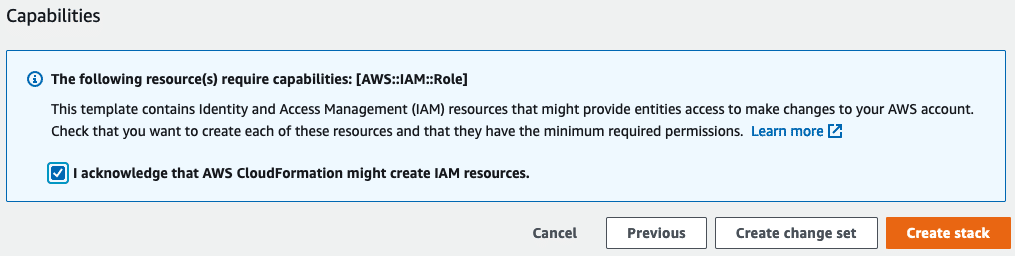

- Deploy the stack: Proceed through the configuration screens, acknowledge that the stack will create IAM resources, and select Create stack. CloudFormation will provision all required components within minutes.

Source: Amazon

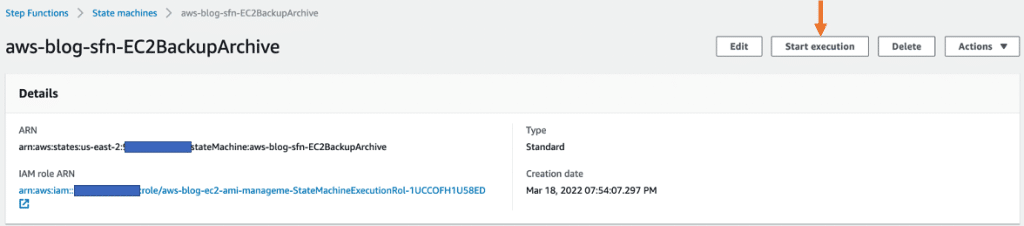

- Test the workflow: Once deployed, the solution runs automatically based on the schedule you provided. To test it manually, navigate to the Step Functions console, select the deployed state machine, and trigger a manual execution.

Source: Amazon

The workflow retrieves eligible EC2 recovery points, stores them as compressed objects in the specified S3 bucket, transitions them to your chosen Glacier storage class, and deletes the original recovery points from AWS Backup. It then generates a summary log file in the S3 bucket under a logs/ directory.
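If you prefer to script the deployment instead of using the console, the same stack parameters can be passed to CloudFormation's `create_stack`, and a manual test run can be started with Step Functions' `start_execution`. In this sketch the stack name, template URL, bucket name, vault name, schedule value, and state machine ARN are all placeholders (the real template link comes from the AWS blog post), and the exact schedule format the template expects should be checked in its parameter description.

```python
from typing import Any, List

STORAGE_CLASSES = {"GLACIER_IR", "GLACIER", "DEEP_ARCHIVE"}


def stack_parameters(bucket: str, storage_class: str, vault: str,
                     days_before: int, schedule: str) -> List[dict]:
    """Build the create_stack Parameters list, with basic sanity checks."""
    if storage_class not in STORAGE_CLASSES:
        raise ValueError(f"storage class must be one of {sorted(STORAGE_CLASSES)}")
    if days_before < 1:
        raise ValueError("DaysRecoveryPointsCreatedBefore must be positive")
    if not schedule.strip():
        raise ValueError("ScheduleWindow must not be empty")
    values = {
        "ArchivalBucketName": bucket,
        "ArchiveStorageClass": storage_class,
        "BackupVaultName": vault,
        "DaysRecoveryPointsCreatedBefore": str(days_before),  # CFN values are strings
        "ScheduleWindow": schedule,
    }
    return [{"ParameterKey": k, "ParameterValue": v} for k, v in values.items()]


def deploy(cfn: Any, stack_name: str, template_url: str, params: List[dict]) -> str:
    """Create the stack, acknowledging that it creates IAM resources."""
    resp = cfn.create_stack(
        StackName=stack_name,
        TemplateURL=template_url,
        Parameters=params,
        Capabilities=["CAPABILITY_IAM", "CAPABILITY_NAMED_IAM"],
    )
    return resp["StackId"]


def start_manual_run(sfn: Any, state_machine_arn: str) -> str:
    """Trigger a one-off execution, like the console's manual test run."""
    return sfn.start_execution(stateMachineArn=state_machine_arn)["executionArn"]
```

If the template uses a CloudFormation transform, `CAPABILITY_AUTO_EXPAND` may also be required.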

Best Practices for AWS Backup Cold Storage

Organizations should consider the following best practices to ensure the most effective backup strategy using cold storage in AWS.

1. Understand Cold Storage Requirements

Before adopting cold storage solutions within AWS Backup, assess organizational needs regarding data retention, compliance, and access patterns. Identify which datasets are required for regulatory reasons or infrequent business use, and determine the mandatory retention periods for each data type. Segregating frequently accessed backups from archival data allows for efficient policy assignment and cost management in AWS Backup.

Additionally, document the latency tolerances for any potential data restore operations. This establishes clear expectations around backup recovery times, especially since cold storage services like S3 Glacier have significantly longer retrieval latencies compared to standard storage. Understanding these requirements ensures that you choose the most suitable storage class and configure backup policies that align with internal and external obligations.

✅ Pro tip: AWS Backup only allows you to archive full snapshots (not incrementals) and stores them in a tier that costs as much as standard S3 while taking as long to recover as Glacier. So, if automated archiving is part of your strategy, it’s much more cost-effective to go with a tool like N2W, which can reduce those long-term storage costs by 92%.

2. Configure Lifecycle Policies Appropriately

Lifecycle policies in AWS Backup dictate when recovery points transition from warm storage to lower-cost cold storage. These policies must be defined based on the organization’s access requirements and cost optimization goals. Automating the transition reduces manual overhead and ensures data moves on schedule, lowering storage costs while recent backups stay in warm storage for fast restores.

It’s important to review and adjust lifecycle settings periodically to align with changing business and compliance needs. For example, as regulatory requirements evolve, the retention period in cold storage may need adjustment. Consistent review of lifecycle policies ensures that backup data moves efficiently between storage classes, balancing cost and accessibility without incurring unexpected retrieval delays or compliance issues.
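In AWS Backup, the warm-to-cold transition is configured per backup rule through the `Lifecycle` block (`MoveToColdStorageAfterDays` and `DeleteAfterDays`). AWS Backup requires recovery points to remain in cold storage for at least 90 days, so retention must exceed the transition point by 90 days or more; the sketch below enforces that before the plan is submitted with `create_backup_plan`. The plan, rule, and vault names and the schedule are placeholders, and cold-storage support varies by resource type.

```python
MIN_COLD_DAYS = 90  # AWS Backup minimum time a recovery point spends in cold storage


def backup_plan(name: str, vault: str, schedule: str,
                cold_after_days: int, delete_after_days: int) -> dict:
    """Build a BackupPlan dict with a warm-to-cold lifecycle."""
    if delete_after_days < cold_after_days + MIN_COLD_DAYS:
        raise ValueError(
            f"DeleteAfterDays must be at least {cold_after_days + MIN_COLD_DAYS} "
            f"when moving to cold storage after {cold_after_days} days"
        )
    return {
        "BackupPlanName": name,
        "Rules": [{
            "RuleName": f"{name}-rule",
            "TargetBackupVaultName": vault,
            "ScheduleExpression": schedule,
            "Lifecycle": {
                "MoveToColdStorageAfterDays": cold_after_days,
                "DeleteAfterDays": delete_after_days,
            },
        }],
    }
```

Submitting it would look like `boto3.client("backup").create_backup_plan(BackupPlan=backup_plan("compliance", "Default", "cron(0 5 ? * * *)", 30, 2555))`, which keeps daily backups warm for 30 days and retains them for roughly seven years.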

3. Optimize Backup Frequency and Retention

Optimizing backup frequency is key to reducing unnecessary storage costs in AWS Backup cold storage. Determine the minimum backup interval needed to meet business recovery objectives and compliance. Backing up data too frequently results in redundant copies and inflated storage costs, particularly when those copies age into cold storage classes like S3 Glacier or Glacier Deep Archive.

At the same time, set appropriate retention periods for each backup set. Retain only the necessary number of backup versions to meet legal and operational standards, while expiring and deleting older, unneeded backups. By tuning frequency and retention in tandem, organizations can efficiently manage backup volumes and keep storage expenditures aligned with actual risk and regulatory exposures.

✅ Pro tip: With N2W you can choose to set a different number of generations for DR snapshots vs regular backups, thus minimizing your DR costs, while maximizing your ability to have your critical data protected and available.

4. Use Access Controls and Encryption

Strong access controls must be enforced on AWS Backup vaults containing cold storage data to prevent unauthorized access or tampering. Use AWS IAM roles and policies to limit who can create, manage, and restore backups, ensuring that only approved personnel have access to sensitive archives. Implement multi-factor authentication (MFA) for critical actions to add a further layer of protection.

Encryption should be enabled for both data at rest and in transit. AWS Backup supports integration with AWS Key Management Service (KMS), allowing organizations to manage and audit encryption keys. Secure backup configurations help ensure data confidentiality, integrity, and compliance with regulatory frameworks, which often require end-to-end encryption and strict key management for long-term archival storage.

✅ Pro tip: Unlike AWS Backup, N2W supports full multi-region KMS and the ability to ‘switch out’ encryption key for added security.

5. Test Data Recovery Procedures

Regular testing of data recovery procedures is essential for ensuring reliable access to backups stored in cold storage. Periodic restore exercises help verify the integrity of archives and measure the time required to retrieve data from cold storage classes like S3 Glacier, where recovery may take hours. This allows IT teams to update disaster recovery documentation with accurate recovery time estimates and process details.

✅ Pro tip: N2W runs automated DR drills so you can test restores regularly—and sleep easy knowing they’ll work when it counts.

Through these tests, organizations can detect potential issues with backup configurations, missing permissions, or gaps in automation. They also ensure that staff are proficient in restore operations, which are especially critical during emergencies. Testing not only validates technical configurations but also supports compliance reporting and can reveal optimization opportunities in backup policies or cold storage usage.
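To turn restore tests into recovery-time numbers, one approach is to start a restore from a recovery point and compute elapsed time from the restore job's `CreationDate` and `CompletionDate`. The sketch below uses AWS Backup's `start_restore_job` and `describe_restore_job`. The recovery point ARN, IAM role, and restore metadata are placeholders; the required metadata keys differ by resource type and can be read with `get_recovery_point_restore_metadata`. Because cold-tier restores can take hours, this loop suits a script or orchestrated workflow rather than a single Lambda invocation.

```python
import time
from datetime import datetime
from typing import Any, Callable, Dict, Optional

TERMINAL_STATES = {"COMPLETED", "ABORTED", "FAILED"}


def elapsed_seconds(start: datetime, end: datetime) -> float:
    """Wall-clock duration of a restore job in seconds."""
    return (end - start).total_seconds()


def timed_restore(backup: Any, recovery_point_arn: str, role_arn: str,
                  metadata: Dict[str, str], poll_seconds: float = 60,
                  sleep: Callable[[float], None] = time.sleep) -> dict:
    """Start one restore test, wait for it to finish, and report its duration."""
    job_id = backup.start_restore_job(
        RecoveryPointArn=recovery_point_arn,
        Metadata=metadata,
        IamRoleArn=role_arn,
    )["RestoreJobId"]
    while True:
        job = backup.describe_restore_job(RestoreJobId=job_id)
        if job["Status"] in TERMINAL_STATES:
            break
        sleep(poll_seconds)  # poll sparingly; cold restores can be slow
    duration: Optional[float] = None
    if job.get("CreationDate") and job.get("CompletionDate"):
        duration = elapsed_seconds(job["CreationDate"], job["CompletionDate"])
    return {"job_id": job_id, "status": job["Status"], "duration_seconds": duration}
```

Recording `duration_seconds` from each drill gives the measured recovery times that disaster recovery documentation should reflect.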

Easily Store Backups to Cold Storage with N2W

Managing AWS Backup cold storage policies manually can get complicated fast—lifecycle rules, Glacier transitions, restore tests…it’s a lot. N2W makes it ridiculously easy:

- Automated Archiving: Instantly move (incremental) EBS snapshots or RDS backups into Glacier or Deep Archive with AnySnap Archiver.

- Click-to-Restore: Browse and restore individual files, full servers, or entire VPCs from cold storage—without waiting on clunky manual processes.

- Cost Visibility: See at a glance how much you’re saving with cold storage using built-in cost explorer dashboards.

- Cross-Cloud Flexibility: Need to restore AWS backups into Azure or Wasabi? No problem—cold storage doesn’t mean locked storage.

With N2W, you don’t just save up to 92% on storage costs—you keep control, speed, and compliance intact.

👉 Want to slash your AWS bill even further? Download the AWS Cost Optimization Guide and uncover practical ways to cut costs without cutting corners.