Better fault tolerance, high availability, and reduced cost are the Holy Grail for any developer and a top priority for any business that wants to succeed in today’s highly dynamic marketplace. Auto scaling, made practical by cloud computing, can deliver those advantages and more. Auto scaling is the mechanism by which the computational resources allocated to an application scale up or down at any given point in time, depending on the application’s load. Traditionally, scaling was a manual and costly proposition, but cloud computing has changed that. Today, you can design auto-scalable applications that sustain increasing user requests without any downtime.

Before cloud computing, the idea of adding resources to even a single server on the fly was laughable. Applications therefore suffered frequent performance issues and crashes, which in turn caused business and financial losses. Diagram 1 illustrates how auto scaling can sustain peak load for an application: on weekends, when user requests are low, the application requires just one server; on workdays, it requires two servers for normal operation; and at the end of each month, it requires four.
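The schedule in Diagram 1 can be expressed as a small Python sketch. The function names are hypothetical, and the code assumes that "end of the month" means the last calendar day and that the month-end peak overrides the weekend rule; Diagram 1 may draw these boundaries differently. `server_days` compares the server-days the schedule uses with keeping four servers running all month.

```python
import calendar
from datetime import date


def servers_needed(day: date) -> int:
    """Server count for the Diagram 1 scenario (hypothetical schedule).

    Assumptions: "end of month" is the last calendar day, and that peak
    takes precedence over the weekend rule.
    """
    last_day = calendar.monthrange(day.year, day.month)[1]
    if day.day == last_day:
        return 4  # month-end peak
    if day.weekday() >= 5:  # Saturday (5) or Sunday (6)
        return 1  # low weekend traffic
    return 2  # normal workday operation


def server_days(year: int, month: int) -> tuple[int, int]:
    """Return (scheduled, static_peak) server-days for one month."""
    days_in_month = calendar.monthrange(year, month)[1]
    scheduled = sum(
        servers_needed(date(year, month, d)) for d in range(1, days_in_month + 1)
    )
    static_peak = 4 * days_in_month  # four servers every day, sized for the peak
    return scheduled, static_peak


print(server_days(2024, 1))  # (56, 124)
```

For January 2024, following the load uses 56 server-days instead of 124, which is the kind of gap auto scaling is meant to close.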

Auto Scaling in DynamoDB

With the Amazon DynamoDB cloud database service, you must set provisioned read and write capacity for each table and global secondary index, whether new or existing. Here’s the problem with that approach: if you provision too little capacity, requests are throttled and your application suffers performance issues, or may even crash. If you provision too much, your bill skyrockets.
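The trade-off can be made concrete with a simplified model: capacity fixed at one value, a hypothetical per-period demand profile, and a tally of how much demand exceeds capacity (and would be throttled) versus how much capacity sits idle (and is paid for anyway). The numbers are illustrative, not measurements.

```python
def evaluate_fixed_capacity(demand: list[int], provisioned: int) -> dict[str, int]:
    """Tally throttled and idle units when capacity is fixed at `provisioned`.

    Each entry in `demand` is the capacity the application tries to consume
    in one period (a simplified, hypothetical model).
    """
    throttled = 0  # demand above provisioned capacity: requests get throttled
    idle = 0       # provisioned capacity nobody used: paid for but wasted
    for requested in demand:
        if requested > provisioned:
            throttled += requested - provisioned
        else:
            idle += provisioned - requested
    return {"throttled": throttled, "idle": idle}


hourly_demand = [200, 300, 900, 1200, 400]  # hypothetical read demand per period

print(evaluate_fixed_capacity(hourly_demand, 500))   # {'throttled': 1100, 'idle': 600}
print(evaluate_fixed_capacity(hourly_demand, 1200))  # {'throttled': 0, 'idle': 3000}
```

No single fixed value avoids both outcomes when demand varies this much; sizing for the peak removes throttling only by paying for a large amount of idle capacity.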

How can auto scaling in DynamoDB address this issue and further enhance your cloud elasticity? With auto scaling enabled, DynamoDB uses the AWS Application Auto Scaling service to adjust provisioned throughput capacity on your behalf, in response to actual, monitored traffic patterns. As a result, a table or global secondary index can increase its provisioned read and write capacity to handle sudden increases in traffic without throttling. Conversely, when the workload decreases, Application Auto Scaling decreases the throughput so that you don’t pay for unused provisioned capacity.

Scaling Policy

Auto scaling adjusts resources up or down according to the scaling policies you specify. You define a policy for each table or global secondary index whose read capacity, write capacity, or both you want to scale dynamically to accommodate system load, along with the minimum and maximum capacity within which it may scale. Each policy uses a target tracking algorithm: it continuously compares actual utilization with the target utilization you set and adjusts provisioned throughput in response to actual traffic.
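Scaling policies for DynamoDB are managed through the AWS Application Auto Scaling API. As a sketch, the Python function below only builds the request parameters for its `RegisterScalableTarget` and `PutScalingPolicy` operations for a table's read capacity; nothing is sent to AWS. The table name `Orders`, the policy name, and the capacity bounds are hypothetical placeholders. With boto3, you would pass the dictionaries to an `application-autoscaling` client as `client.register_scalable_target(**target)` and `client.put_scaling_policy(**policy)`; verify the parameters against the current AWS documentation before relying on them.

```python
def read_scaling_requests(table: str, min_units: int, max_units: int,
                          target_percent: float) -> tuple[dict, dict]:
    """Build Application Auto Scaling request parameters for a table's reads.

    All names and bounds are caller-supplied placeholders; this function only
    assembles dictionaries and makes no AWS calls.
    """
    resource_id = f"table/{table}"  # a GSI would be "table/<table>/index/<index>"
    dimension = "dynamodb:table:ReadCapacityUnits"

    # Registers the table's read capacity as scalable within [min, max].
    target = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "MinCapacity": min_units,
        "MaxCapacity": max_units,
    }

    # A target tracking policy that keeps read utilization near target_percent.
    policy = {
        "PolicyName": f"{table}-read-target-tracking",  # hypothetical name
        "ServiceNamespace": "dynamodb",
        "ResourceId": resource_id,
        "ScalableDimension": dimension,
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_percent,
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBReadCapacityUtilization",
            },
        },
    }
    return target, policy


target, policy = read_scaling_requests("Orders", 5, 2000, 50.0)
```

For write capacity, the same shape applies with the `dynamodb:table:WriteCapacityUnits` dimension and the `DynamoDBWriteCapacityUtilization` metric; for a global secondary index, use the `dynamodb:index:...` dimensions and an `index/<name>` suffix on the resource ID.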

Here’s how it works:

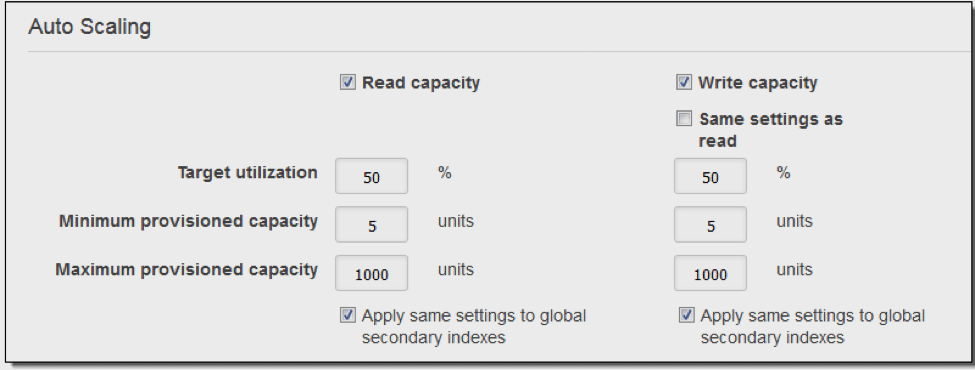

Utilization is the ratio of consumed capacity to provisioned capacity at a given moment; target utilization is the value of that ratio the administrator wants to maintain. Suppose a table is provisioned with 1,000 read capacity units and, at one point in time, consumes 700 of them: utilization is 700/1000 = 70%. However, as shown in Diagram 2, the target utilization has been set to 50%. To bring utilization back down to 50%, provisioned read capacity must therefore be increased to 700/0.5 = 1,400 units.

Diagram 2. Entering parameters
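The arithmetic in this example reduces to provisioned capacity = consumed capacity / target utilization. The sketch below is a simplification that assumes capacity jumps straight to that value, clamped to the policy's minimum and maximum; AWS's target tracking also waits for sustained alarms before acting. The bounds in the example are hypothetical.

```python
def capacity_for_target(consumed: int, target_percent: int,
                        min_units: int, max_units: int) -> int:
    """Smallest capacity at which utilization is at or below the target, clamped.

    Simplified model of target tracking, not AWS's exact algorithm.
    """
    # Integer ceiling of consumed / (target_percent / 100), avoiding float error.
    needed = -(-consumed * 100 // target_percent)
    return max(min_units, min(max_units, needed))


provisioned, consumed = 1000, 700
print(consumed * 100 / provisioned)                 # 70.0 (% utilization)
print(capacity_for_target(consumed, 50, 5, 40000))  # 1400
print(capacity_for_target(consumed, 50, 5, 1200))   # 1200, capped by the maximum
```

The last call shows why the maximum matters: if it is set too low, the policy cannot reach the target and utilization stays above 50%.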

Components and Steps Involved in Auto Scaling

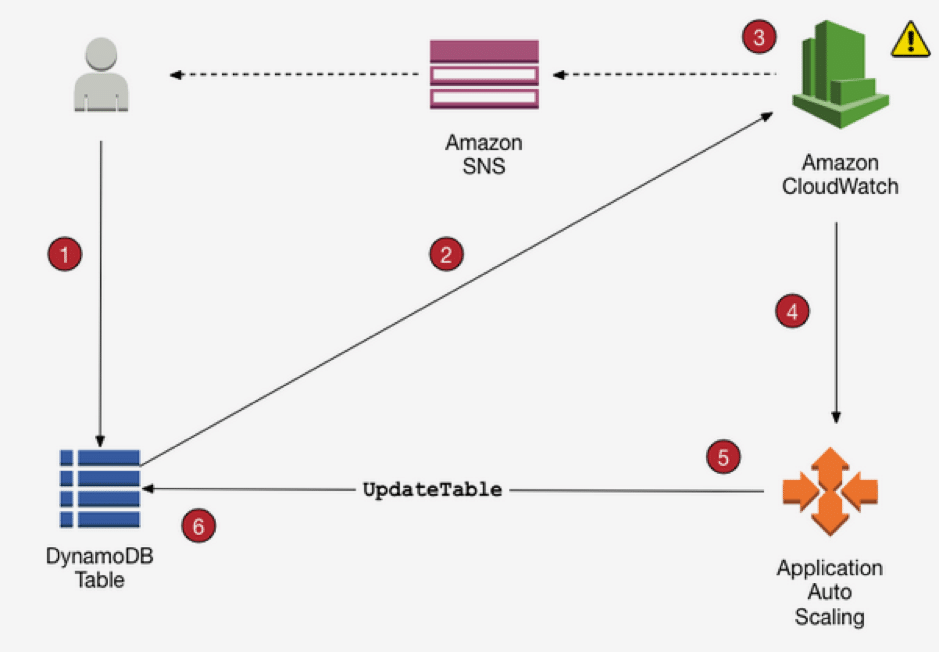

To better understand how auto scaling works, here’s a look at the components and steps involved in auto scaling a single Amazon DynamoDB table (Diagram 3).

- Step 1. A set of users accesses a DynamoDB table for which an auto-scaling policy has been configured.

- Step 2. Amazon CloudWatch, a service that collects and tracks metrics and triggers alarms when a specified threshold is breached, monitors the consumed capacity.

- Step 3. If utilization (consumed capacity as a share of provisioned capacity) stays above or below the configured target utilization for a sustained period, CloudWatch triggers an alarm. You can view the alarm in the AWS Management Console and optionally receive notifications through Amazon Simple Notification Service (SNS).

- Steps 4 and 5. The CloudWatch alarm invokes Application Auto Scaling, which evaluates the scaling policy and issues an UpdateTable request to change the table’s provisioned throughput.

- Step 6. DynamoDB processes the UpdateTable request, increasing or decreasing the table’s provisioned throughput capacity so that utilization approaches the target.

Diagram 3. Auto-scaling process
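The cycle in Steps 2 through 6 can be modeled with a toy simulation. This is not AWS's implementation: the `Table` class and its `update_table` method are hypothetical stand-ins for a DynamoDB table and the UpdateTable request, the loop plays the role of CloudWatch and Application Auto Scaling, and `BREACH_PERIODS = 3` is an assumed value for how long a breach must last before the alarm fires. Decrease limits are ignored here.

```python
from dataclasses import dataclass

BREACH_PERIODS = 3  # hypothetical: periods a breach must last before the alarm fires


@dataclass
class Table:
    """Hypothetical stand-in for a DynamoDB table's read capacity setting."""
    provisioned: int

    def update_table(self, new_capacity: int) -> None:
        # Step 6: apply the new provisioned throughput.
        self.provisioned = new_capacity


def run_cycle(table: Table, consumed_per_period: list[int], target_percent: int) -> list[int]:
    """Simulate Steps 2-6; return provisioned capacity after each period."""
    history = []
    above = below = 0
    for consumed in consumed_per_period:
        # Step 2: record utilization for this period (CloudWatch's role).
        utilization = consumed * 100 / table.provisioned
        above = above + 1 if utilization > target_percent else 0
        below = below + 1 if utilization < target_percent else 0
        # Step 3: the alarm fires only after a sustained breach.
        if above >= BREACH_PERIODS or below >= BREACH_PERIODS:
            # Steps 4-5: evaluate the policy and request capacity for the target.
            new_capacity = max(1, -(-consumed * 100 // target_percent))
            table.update_table(new_capacity)
            above = below = 0
        history.append(table.provisioned)
    return history


print(run_cycle(Table(1000), [400, 700, 700, 700, 700, 300, 300, 300], 50))
# [1000, 1000, 1000, 1400, 1400, 1400, 1400, 600]
```

With a 50% target, sustained consumption of 700 units on 1,000 provisioned units raises capacity to 1,400 after three periods, and a later sustained drop to 300 units lowers it to 600.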

These steps describe just one auto-scaling cycle; DynamoDB can scale up resources many times a day in accordance with the defined auto-scaling policy. Decreases, however, are limited: read and write capacity units can be decreased up to four times at any point during a day. Additionally, if no decrease has occurred in the past four hours, one more decrease is allowed, which brings the maximum number of decreases in a day to nine (four in the first four hours, plus one in each of the five subsequent four-hour windows).
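The decrease limit can be checked with a short simulation. This sketch models the rule as stated above: a fixed number of decreases allowed at any time, plus one extra decrease whenever none occurred in the preceding window. The function name is hypothetical, and the window length is a parameter because AWS has revised this quota over time; check the current DynamoDB service quotas for the values that apply today.

```python
def allowed_decreases(request_hours: list[float], free_per_day: int = 4,
                      window_hours: float = 4) -> list[float]:
    """Return which capacity-decrease requests within one day the rule allows.

    `free_per_day` decreases may happen at any time; after that, one more is
    allowed whenever no decrease occurred in the preceding `window_hours`.
    """
    allowed: list[float] = []
    for t in sorted(request_hours):
        if not 0 <= t < 24:
            raise ValueError("request times must be hours within one day [0, 24)")
        if len(allowed) < free_per_day:
            allowed.append(t)  # one of the decreases allowed at any time
        elif t - allowed[-1] >= window_hours:
            allowed.append(t)  # extra decrease: none in the past window
    return allowed


# Request a decrease five times at midnight, then once every hour.
requests = [0] * 5 + list(range(1, 24))
print(allowed_decreases(requests))                       # [0, 0, 0, 0, 4, 8, 12, 16, 20]
print(len(allowed_decreases(requests, window_hours=1)))  # 27
```

With a four-hour window the greedy schedule reaches exactly the nine decreases described above; shortening the window to one hour would raise the ceiling to 27.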

Benefits of Auto Scaling

Auto-scaling has a number of key benefits, including:

- Better Fault Tolerance. Auto scaling keeps tabs on server health. When it detects an issue, it can take the faulty server out of service and replace it with a healthy one on the fly.

- High Availability. When the number of user requests is high, provisioned capacity scales up automatically to accommodate the increased load, maintaining application performance and preventing availability issues.

- Cost Savings. Auto scaling fits the cloud’s pay-as-you-go model: because capacity scales down when demand falls, you pay for capacity that closely tracks what you actually use rather than for peak capacity around the clock, and the savings can be substantial.
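Because DynamoDB provisioned capacity is billed by the hour, the savings can be estimated by comparing capacity-unit-hours. The sketch below assumes a hypothetical demand profile, static capacity sized for the peak with the same target headroom, and an idealized auto scaler that reaches the required capacity every hour with no scaling lag and no decrease limits, so real savings will typically be lower. Multiply the unit-hours by your region's per-unit price to convert them to cost.

```python
def capacity_unit_hours(hourly_demand: list[int], target_percent: int) -> tuple[int, int]:
    """Return (static, scaled) billed capacity-unit-hours for one demand profile."""
    def needed(units: int) -> int:
        # Integer ceiling of units / (target_percent / 100).
        return -(-units * 100 // target_percent)

    static = needed(max(hourly_demand)) * len(hourly_demand)  # peak-sized, every hour
    scaled = sum(needed(units) for units in hourly_demand)    # follows demand hourly
    return static, scaled


# Hypothetical day: 20 quiet hours at 100 units, 4 busy hours at 800 units.
demand = [100] * 20 + [800] * 4
static, scaled = capacity_unit_hours(demand, 50)
print(static, scaled)                                  # 38400 10400
print(f"{1 - scaled / static:.0%} fewer unit-hours")   # 73% fewer unit-hours
```

The spikier the workload, the larger the gap between peak-sized and demand-following capacity.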

Summary

Auto scaling eliminates much of the guesswork involved in provisioning adequate capacity when creating new tables. It also reduces the operational burden of continuously monitoring consumed throughput and adjusting provisioned capacity manually. Moreover, auto scaling helps ensure application availability and reduces the cost associated with unused provisioned capacity. These capabilities, now available in Amazon DynamoDB, benefit developers and businesses alike.