Today you’re going to learn about why building a data lake is important for cloud optimization. We’ll discuss the kind of data you need and how to get started with building a data lake. And I’ll be sharing links to access the resources that I reference throughout this post. (hint: check out this post for a primer on data lakes)

Why might you use a data lake for cost optimization?

1. Complex Reporting

We often hear from customers who want to create complex reporting for stakeholders and need access to large sets of data to do this. Things like chargeback, showback, cost allocation, and utilization reports can all be built on a data lake. And, as we are talking about optimization today, cost optimization is a big part of that.

2. Tracking Goals

Utilization data and recommendations for savings can be spread across an entire organization. This can make it hard to get a clear picture of where you’re making savings. So how do you know if you’re doing well at optimization? How are you tracking your goals, your KPIs? If you don’t have a single pane of glass to see your goals and track them efficiently, visibility is difficult. Having a data lake makes it much easier to bring this information together from across the organization.

What kind of data should be in your data lake?

As I’m a cost expert, the first data we need is cost data. And, when it comes to cost data, there are three main options for where to get it with AWS.

1. CSV Billing

First, there is your CSV billing data. This is often higher level and it’s simple to understand. But realistically, we want to have a bit more control.

2. Cost Explorer

You could also be using Cost Explorer, which is a native tool that allows you to filter and group your data and get insights into what you’re actually spending. But for our data lake we want to get a bit more granular.

3. Cost and Usage Report

The most granular report we can have is the AWS Cost and Usage Report (CUR). This is a really important service. While many customers take advantage of this, there are so many more that still don’t even know it exists.

This link will help you set up your Cost and Usage Report in the best way you can. There are a lot of tips on that page about setting up things like resource IDs, so you can see which resources are actually causing your spend. And when you look at it at hourly granularity, you can look at your use of flexible services such as RDS or EC2. Are you actually using the flexible services as they’re meant to be used?

Set up your CUR in Parquet format, rather than the default CSV. Using the Parquet format means that you can use Amazon Athena to get access to your data.
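To make those settings concrete, here is a minimal sketch of what a report definition with hourly granularity, resource IDs, Parquet, and the Athena integration could look like. The field names follow the `put_report_definition` call in boto3’s `cur` client as I understand it; the report name, bucket, prefix, and region are placeholders I made up, and the helper function itself is hypothetical. The sketch only builds the dictionary, so it runs without AWS credentials.

```python
def build_cur_report_definition(report_name, bucket, prefix, region):
    """Build a CUR report definition dict (hypothetical helper, no AWS call)."""
    return {
        "ReportName": report_name,
        "TimeUnit": "HOURLY",                      # hourly granularity
        "Format": "Parquet",                       # Parquet instead of CSV
        "Compression": "Parquet",
        "AdditionalSchemaElements": ["RESOURCES"],  # include resource IDs
        "S3Bucket": bucket,
        "S3Prefix": prefix,
        "S3Region": region,
        "AdditionalArtifacts": ["ATHENA"],          # Athena integration
        "RefreshClosedReports": True,
        "ReportVersioning": "OVERWRITE_REPORT",
    }


# Placeholder values, not real resources.
definition = build_cur_report_definition(
    "example-cur", "example-cur-bucket", "cur", "us-east-1"
)

# With boto3 installed and credentials configured, this could be submitted as:
#   boto3.client("cur", region_name="us-east-1").put_report_definition(
#       ReportDefinition=definition)
print(definition["Format"], definition["TimeUnit"])
```

You can set the same options in the console when you create the report; the dictionary is just a way to see them all in one place.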

The CUR is the foundation of the cost data that we’re going to build on. As we go, I’m going to give you a tip for each step. So for this first step, the tip is 👉 the more granular, the better. When you’re setting up a cost data lake, you want to make sure that all of your data is super granular. The AWS Cost and Usage Report (CUR) does that for you, which is nice.

How to set up the proper structure for a cost data lake

When it comes to your business, we need to understand your organization. If you don’t know who owns what, how are you supposed to track utilization and optimization? You need an efficient account structure. The following account structure is one that we see many customers utilize and I used to use it too. I really want to emphasize this setup, because it is key to making sure that things, like those reports I mentioned, are as simple as possible.

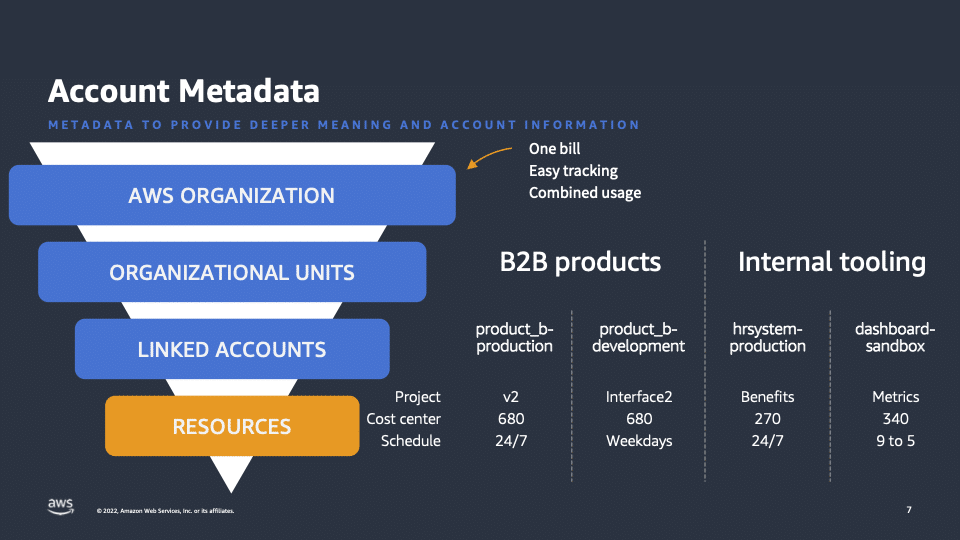

First of all, in this account structure, we start with our organization. This is often in your payer account and is a centralized hub that looks across your entire estate. Within this, we have our organizational units. These are naturally occurring groups of accounts. So they could be products, teams, whatever works best for your business. And within them, we have our linked accounts.

As you can see from the image above, our linked accounts have names such as production, development, or sandbox. And the key thing is that everything within that account is related to that name or appropriate tag (because you can tag accounts that you use in your organization). This simplifies things when it comes to tagging individual resources (EC2, S3, etc). So you don’t actually have to tag the resources with this kind of metadata because they inherit it from the account above.

Now to help you with this, check out the lab that we have, which is a way to collect that metadata from your organization and to connect it with your CUR (because the data is not in there natively). Things like tags, Organizational Unit (OU) name, and your account names can actually be injected into your CUR to make your reporting a lot simpler.
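As a rough illustration of what “injecting” account metadata means, the sketch below joins a few cost rows to account metadata by account ID. This is not the lab’s implementation, just the idea in plain Python. `line_item_usage_account_id` is the CUR column for the account; the account names, OU names, `owner` tag, and the helper function are made-up examples.

```python
def enrich_with_account_metadata(cost_rows, accounts):
    """Attach account name, OU name, and owner tag to each cost row."""
    enriched = []
    for row in cost_rows:
        # Fall back to "unknown" so orphaned accounts stand out in reports.
        meta = accounts.get(row["line_item_usage_account_id"], {})
        enriched.append({
            **row,
            "account_name": meta.get("name", "unknown"),
            "ou_name": meta.get("ou", "unknown"),
            "owner": meta.get("tags", {}).get("owner", "unknown"),
        })
    return enriched


# Made-up metadata, as it might be collected from your organization.
accounts = {
    "111111111111": {"name": "production", "ou": "product-a",
                     "tags": {"owner": "team-a"}},
    "222222222222": {"name": "sandbox", "ou": "product-a", "tags": {}},
}

# Made-up cost rows in the shape of CUR line items.
cost_rows = [
    {"line_item_usage_account_id": "111111111111", "line_item_unblended_cost": 12.5},
    {"line_item_usage_account_id": "999999999999", "line_item_unblended_cost": 3.0},
]

for row in enrich_with_account_metadata(cost_rows, accounts):
    print(row["account_name"], row["owner"], row["line_item_unblended_cost"])
```

In a real data lake you would more likely do this join in Athena, but the principle is the same: the account ID is the key that connects cost to ownership.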

This is really important for tagging because, if you have developers who are building out infrastructure, you don’t want to have to depend on them to make changes to the tags with this kind of data. Instead, leave tagging for things like security or other things that actually affect the resource. And shout out to Jon Myer: we talked about tagging on his pod here.

It’s so much easier to optimize resources when you know who owns them, and when you have data attached to them. So the tip for this step is 👉 have an ownership strategy.

Adding data from other services into the data lake

1. Inventory

So far we’ve talked about our cost data and our accounts, but what about your actual resources? Similar to account metadata, we need to collect all this information and make sure it’s organized. Also, when I talk about services, I’m talking about a couple of different things in this situation. First, we have service data in general. This can be like an inventory of what you’re using. It could be as simple as listing all of the EBS volumes that you have, or listing any Savings Plans or other commitments you have at AWS. Ensuring you keep track of all this information is vital.

2. Recommendations

Then we have tooling, things like AWS Trusted Advisor, AWS Compute Optimizer, and Amazon S3 Storage Lens. I used to find it really annoying, when I was a developer, if I owned three different accounts (like prod, dev, and test) and I had to keep logging into each one of them to go and get the data. But with a data lake, you can pull that information all together, so you can see across your entire application or project rather than each account.

3. Utilization

Lastly, we have utilization data. You may have services where you want to understand how you’re utilizing them (from something like Amazon CloudWatch), and you may want to collect that data into one centralized place. So what we’re recommending today, and the premise of this data lake for optimization, is ingesting all of this into one centralized location. This leads into our third tip 👉 centralize your data.

It’s a lot easier if everything is in one place together, but it doesn’t have to be all in the same bucket. As long as you can connect it, then you have the power over all of your data. Normally, we suggest putting this all into Amazon S3. And the link that we have on this step is for a Collector Lab, which is super exciting because of new developments coming out for it to be multi-cloud as well.

Having all of this data together means you can connect it. Not only can you connect your tags from your accounts to your costs, you can also link back to your Savings Plans information or to your Compute Optimizer recommendations. You have the power to make changes and to see the impact in order to streamline your business processes.

How to access information from a data lake

Now that we have all of the data we’re going to collect (cost data, account data, and service data), how do we access this information? When it comes to S3 data lakes, there are a couple of ways in which I like to do this.

1. Amazon Athena

The first is with Amazon Athena. This is a serverless query service, built on Presto, that lets you use SQL to query data in S3. The Cost and Usage Report has an integration for this: there is a handy little file in your CUR bucket that helps you set up your database. And the other labs that I’ve mentioned build on this concept, that all your data can be accessed through Athena. (hint: learn more about Athena in our Big Data Analytics post)

This means that you can create queries and reports, and you can export that data to do your reporting. Plus, once you have these queries made, you can automate them. For example, you can use something like AWS Lambda to run the query and email the results to someone. That’s what I often used to create automated reports for finance, sending that email out every month to the stakeholders or the business managers. Automating it means they get the data on time, and it’s accurate.
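Here is a hedged sketch of what the query part of that automation might look like. The column names (`line_item_usage_account_id`, `line_item_product_code`, `line_item_unblended_cost`) and the `year`/`month` partitions are what I would expect in a CUR table created through the Athena integration, but check your own table. The database, table, and S3 output location are placeholders, and both helper functions are hypothetical. The Athena client is passed in as an argument, so inside a Lambda function you would pass `boto3.client("athena")`.

```python
import re


def build_monthly_cost_query(table, year, month):
    """Build a cost-per-account-and-service query for one billing month."""
    # Only allow simple identifiers, since the table name is interpolated.
    if not re.fullmatch(r"[A-Za-z_][A-Za-z0-9_]*", table):
        raise ValueError(f"unexpected table name: {table!r}")
    return (
        "SELECT line_item_usage_account_id AS account_id, "
        "line_item_product_code AS service, "
        "SUM(line_item_unblended_cost) AS cost "
        f"FROM {table} "
        # Assumption: partitions are strings without zero padding.
        f"WHERE year = '{int(year)}' AND month = '{int(month)}' "
        "GROUP BY 1, 2 "
        "ORDER BY cost DESC"
    )


def start_monthly_report(athena_client, database, table, year, month,
                         output_location):
    """Start the query in Athena and return its execution ID."""
    response = athena_client.start_query_execution(
        QueryString=build_monthly_cost_query(table, year, month),
        QueryExecutionContext={"Database": database},
        ResultConfiguration={"OutputLocation": output_location},
    )
    return response["QueryExecutionId"]


print(build_monthly_cost_query("example_cur_table", 2024, 3))
```

Athena writes the results as a CSV file to the output location, which is what you would then attach or link in the email. The emailing step is left out here.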

2. Amazon QuickSight

If you want to mess around with the data a bit more and have more flexibility, you can use Amazon QuickSight to visualize information. QuickSight is the AWS Business Intelligence (BI) tool.

User access to data in data lakes

The tip with this final step is 👉 only give access to the data that your users need. Follow the link here for our Cloud Intelligence Dashboards. These are really cool dashboards that allow you to visualize your costs and they’re based on Athena queries. So, as you play with this information, you’ll be able to see your spend and optimization. And I’ll touch on these in a second.

First, let me clarify the tip, “only give access to data your users need”. What I’m talking about here is making sure, from an operational and security point of view, that the people who need to see this cost data can see it.

Data lake: an operational perspective

So for example, when I used to create reports for our finance team, they didn’t care about the nitty gritty elements. They cared about the chargeback model and the data they could put straight into SAP. So when I was talking to finance, I’d say:

- What fields do you need?

- What kind of data do you need?

- And can we automate that?

This allowed them to just take the data and put it straight into their services. The same goes for managers. They care about all of their accounts, and they may want to split up the report by service or resource, depending on what they’re doing. So make sure you’re having discussions with people to find out what data can help them do their job operationally.

Data lake: a security perspective

Then from a security point of view, restricting access to data can be quite key. Some businesses don’t mind sharing cost data widely. However, others need to be quite restrictive. And for that we have Row Level Security (RLS), which enables you to tag those accounts that we mentioned earlier and restrict the data users can access. This means they can go into QuickSight and see their own data but not anyone else’s, which is very cool.
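To give a feel for how this works, here is a small sketch that turns “who may view which account” into a rules file. My understanding is that QuickSight RLS reads a rules dataset with a `UserName` (or `GroupName`) column plus the field to filter on, with multiple values comma-separated in one cell. Treat that layout as an assumption and check the QuickSight documentation. The `account_id` field name, the user names, and the idea that the viewers come from an account tag are all made up for illustration.

```python
import csv
import io


def build_rls_rules(account_viewers):
    """Turn {account_id: [users]} into CSV rules of user -> allowed accounts."""
    # Invert the mapping so each user gets one row listing their accounts.
    by_user = {}
    for account_id, users in account_viewers.items():
        for user in users:
            by_user.setdefault(user, []).append(account_id)

    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    # "account_id" is a placeholder; it must match a field in your dataset.
    writer.writerow(["UserName", "account_id"])
    for user in sorted(by_user):
        writer.writerow([user, ",".join(sorted(by_user[user]))])
    return buf.getvalue()


# Made-up viewers, e.g. read from a hypothetical tag on each account.
account_viewers = {
    "111111111111": ["alice", "bob"],
    "222222222222": ["alice"],
}

print(build_rls_rules(account_viewers))
```

The nice part of driving this from account tags is that access follows ownership: when an account changes hands, you update the tag and regenerate the file.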

The tl;dr on data lakes for cost optimization

I’ve covered quite a lot so let’s summarize this whole data lake thing that I’ve been talking about. First, we’re pulling together all of our cost data and optimization opportunities. The key point is that when you have all this data in one place, and it’s automatically pulled together, this increases your speed and accuracy when providing reports.

Then, looking at the reports, we have:

- Basic CSV reports that you can export to finance or to your stakeholders

- Cloud Intelligence Dashboards that allow you to go in, have a look at your data, try different filters and groupings, and better understand your numbers

- Compute Optimizer or Trusted Advisor dashboards that use the data from these tooling sections so that you can make accurate decisions about where to optimize first.

So for example, if you have thousands of recommendations from Compute Optimizer, maybe you want to narrow that scope down to just the biggest hitters, so that you can make the most impact. What’s key in this case is that account data. Remember, we need to understand who owns what so we can actually make these savings.
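As a sketch of that narrowing-down step, the snippet below ranks recommendations by estimated savings, keeps the top few, and attaches an owner from the account data. The field names (`estimated_monthly_savings`, `account_id`, `owner`) and the sample values are made up, and the helper function is hypothetical. Real Compute Optimizer exports use their own column names.

```python
def top_recommendations(recommendations, accounts, limit=10):
    """Return the highest-saving recommendations with their account owner."""
    ranked = sorted(
        recommendations,
        key=lambda rec: rec["estimated_monthly_savings"],
        reverse=True,
    )
    return [
        {**rec, "owner": accounts.get(rec["account_id"], {}).get("owner", "unknown")}
        for rec in ranked[:limit]
    ]


# Made-up sample data for illustration only.
accounts = {
    "111111111111": {"owner": "team-a"},
    "222222222222": {"owner": "team-b"},
}
recommendations = [
    {"resource": "i-aaa", "account_id": "111111111111", "estimated_monthly_savings": 40.0},
    {"resource": "i-bbb", "account_id": "222222222222", "estimated_monthly_savings": 310.0},
    {"resource": "i-ccc", "account_id": "333333333333", "estimated_monthly_savings": 95.5},
]

for rec in top_recommendations(recommendations, accounts, limit=2):
    print(rec["resource"], rec["owner"], rec["estimated_monthly_savings"])
```

Anything that comes back with an unknown owner is worth chasing first, because nobody can act on a saving for a resource that nobody owns.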

Let’s recap these tips:

- The more granular, the better. The CUR does this for you. But when you are getting other tooling data (maybe from third-party tools), go for as detailed as possible so that you can understand what you’re actually doing.

- Have an ownership strategy. Spend the time making sure that you don’t have any orphaned accounts or orphaned resources. This can become a headache later, so you want to try and avoid that.

- Centralize your data. Make it as easy for you as possible by bringing everything together, so you have that one pane of glass.

- Only give access to the data your users need. Make their lives easier, and your own, by sharing information that’s going to help them do their job.

- And finally, a bonus tip: automation is key through all of these stages. We’ve mentioned Lambda and automation with the CUR. Also, a lot of the labs that I’ve recommended will help you do this. Automation is something that we like to strive for, so that you can reuse processes.

So if you’ve enjoyed learning about cost optimization with data lakes, I have a weekly Twitch show called The Keys to AWS Optimization. We discuss how to optimize, whether that’s optimizing a service or optimizing from a FinOps point of view, and we have a guest every week. Also, if you have any questions in the future, I’m at AWSsteph@amazon.com or tweet me at @liftlikeanerd.

Steph is one of the original members of the FinOps Foundation. As a Senior Commercial Architect at AWS, she works with customers to help them manage utilization and spend, and to improve financial processes. Steph is also the host of a weekly Twitch show called The Keys to AWS Optimization, where she shares stories and solutions to help customers unlock their AWS Costs.