Cloud lock-in: that dreaded term that many organizations are scared of. Cloud lock-in happens when you’ve got so many important IT assets in one cloud and it’d be nearly impossible or not financially reasonable to move them. Many organizations would like the flexibility to use different clouds for different services.

Multi-cloud provisioning and configuration is a big topic but one of the tasks an organization will inevitably come across is VM migration. All cloud environments treat VMs (IaaS) a little differently but luckily they all have a least common denominator (the virtual disk). Every VM anywhere has to have a virtual disk somewhere whether it’s a VHD, VHDX or VMDK. That virtual disk, although a little bit different in each scenario, can be converted back and forth which means we can migrate VMs with their disks to just about anywhere we want! All of the VM-specific configuration items can easily be manipulated depending on what environment the VM is running in.

In this article, we’re going to focus on migrating a Windows VM in Azure to AWS. I will be performing a simple migration for a VM with a single virtual disk. We’re not going to be messing with VMs that have multiple disks attached. I’ll also be using PowerShell to perform the migration. There are a few other ways, such as using the Azure Portal and AWS console or using the AWS CLI, but why go back and forth when you can use one tool to do everything?

Prerequisites

Before we get too crazy, let’s first ensure you meet all of the prerequisites to make this migration happen. I’ll expect you to:

- Have an Azure and AWS account

- Have an Azure storage account

- Have at least one Windows VM running in Azure

- Have an AWS S3 bucket available to store VM images

Azure Cloud Shell

Since we’re going to be moving around some huge files, there’s no sense in making your Internet connection the middleman. We have to transfer potentially hundreds of gigabytes of data. Rather than downloading all of that from Azure and then uploading it to AWS, why not keep it all in the cloud and let Microsoft’s and Amazon’s fast Internet connections handle it? For this article, I’m going to be using the Azure Cloud Shell.

Installing the AWSPowerShell Module

Since we’re going to need to work with AWS obviously, we’ll need to install the AWSPowerShell module in Cloud Shell. We can do this by using Install-Module. We might as well authenticate to AWS while we’re at it too.

PS> Install-Module -Name AWSPowerShell -Force

PS> Set-AWSCredential -AccessKey XXXXXXXX -SecretKey XXXXXXX -StoreAs Default

PS> Set-DefaultAWSRegion -Region us-east-1

VM Preparation

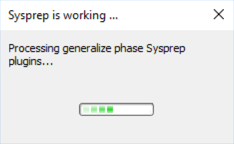

Since some components of the VM are going to be recreated when the VM goes from an Azure VM to an Amazon Machine Image (AMI), it’s important to first prepare the Azure VM. What you need to do here depends on how the VM will be configured in AWS. Some common examples are ensuring the IP address of the VM is set to DHCP, removing unnecessary adapters, etc., but the most important part is sysprep. Go ahead and RDP into your Azure VM, bring up a command prompt and run sysprep.

C:\> C:\Windows\System32\Sysprep\sysprep.exe /generalize /oobe /shutdown

Sysprep’s generalize switch removes unique information from the VM, and the oobe switch brings up a setup menu for you once the image boots in AWS. Once sysprep is done, it should automatically shut the VM down. Once the OS in the VM has shut down, it’s a good idea to stop the VM in Azure as well so that it’s deallocated.

PS> Get-AzureRmVM -ResourceGroupName adbtesting -Name PSWA | Stop-AzureRmVm -Force

Azure File Share

Since we’re not about to use our own environment for this migration, let’s use Microsoft’s in the form of their Azure Files service. We need a place that can hold a few hundred gigabytes. The Azure Cloud Shell has some space, but it’s not nearly enough. For that, we need to use Azure storage, and we need to mount it as a local drive within Cloud Shell. Azure Files is a great service that allows us to do that. In the Cloud Shell, let’s create a temporary Azure File Share using an existing storage account. We’ll tear this down when we’re done.

$storageAccount = Get-AzureRmStorageAccount -ResourceGroupName adbtesting -Name adbtestingdisks656

$storageKey = (Get-AzureRmStorageAccountKey -ResourceGroupName $storageAccount.ResourceGroupName -Name $storageAccount.StorageAccountName | select -first 1).Value

$storageContext = New-AzureStorageContext -StorageAccountName $storageAccount.StorageAccountName -StorageAccountKey $storageKey

$share = New-AzureStorageShare -Name migrationstorage -Context $storageContext

Once the share is created, we then need to mount the share to make it available as a drive in Windows. To do that, we can use the New-PSDrive command and create a temporary X drive as shown below.

$secKey = ConvertTo-SecureString -String $storageKey -AsPlainText -Force

$credential = New-Object System.Management.Automation.PSCredential -ArgumentList "Azure\$($storageAccount.StorageAccountName)", $secKey

$shareDrive = New-PSDrive -Name X -PSProvider FileSystem -Root "\\$($storageAccount.StorageAccountName).file.core.windows.net\$($share.Name)" -Credential $credential -Persist

AWS Preparation

We now need to do some preparation on the AWS side of things. We’ll be using AWS’s VM Import/Export service. It requires a service role with a policy assigned to it that gives the service permission to import the VM’s image. To do this, we’ll need to create two JSON files and, from within Cloud Shell, use them as input to a couple of PowerShell commands. Go ahead and create two files locally called servicerole.json and policy.json as shown below. Be sure to replace disk-image-file-bucket in the policy JSON with the name of the S3 bucket that you’ll be uploading the image to.

servicerole.json

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": { "Service": "vmie.amazonaws.com" },
            "Action": "sts:AssumeRole",
            "Condition": {
                "StringEquals": {
                    "sts:Externalid": "vmimport"
                }
            }
        }
    ]
}

policy.json

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "s3:GetBucketLocation",
                "s3:GetObject",
                "s3:ListBucket"
            ],
            "Resource": [
                "arn:aws:s3:::disk-image-file-bucket",
                "arn:aws:s3:::disk-image-file-bucket/*"
            ]
        },
        {
            "Effect": "Allow",
            "Action": [
                "ec2:ModifySnapshotAttribute",
                "ec2:CopySnapshot",
                "ec2:RegisterImage",
                "ec2:Describe*"
            ],
            "Resource": "*"
        }
    ]
}

Save these to your local disk somewhere. We’ll now need to upload these files to the Azure File Share we created earlier. For instructions on how to do that, you may refer to the Azure documentation. Once the JSON files are in the Azure File Share, you can reference them to create the service role with New-IAMRole and attach the policy to it with Write-IAMRolePolicy.
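Before running the role commands, the bucket-name substitution mentioned earlier can be scripted rather than edited by hand. What follows is only a minimal sketch: Set-PolicyBucketName is a hypothetical helper (not part of any AWS or Azure module), and the inline JSON fragment stands in for the real policy.json.

```powershell
# Hypothetical helper: swaps the placeholder bucket name in a policy document
# and confirms the result still parses as JSON.
function Set-PolicyBucketName {
    param(
        [Parameter(Mandatory)] [string] $PolicyJson,
        [Parameter(Mandatory)] [string] $BucketName,
        [string] $Placeholder = 'disk-image-file-bucket'
    )

    # String.Replace is a literal, case-sensitive substitution (no regex)
    $updated = $PolicyJson.Replace($Placeholder, $BucketName)

    # ConvertFrom-Json throws on malformed JSON, so a typo surfaces here
    # instead of as an IAM error later on
    $null = $updated | ConvertFrom-Json -ErrorAction Stop

    return $updated
}

# Example with an inline fragment; 'adambbucket' is the bucket name used later in this article
$sample = '{ "Resource": [ "arn:aws:s3:::disk-image-file-bucket", "arn:aws:s3:::disk-image-file-bucket/*" ] }'
$result = Set-PolicyBucketName -PolicyJson $sample -BucketName 'adambbucket'
$result
```

Against the real file, the same helper could be fed with (Get-Content -Raw -Path X:\policy.json) and its output written back with Set-Content.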

PS> New-IAMRole -RoleName vmimport -AssumeRolePolicyDocument (Get-Content -Raw -Path X:\servicerole.json)

PS> Write-IAMRolePolicy -PolicyDocument (Get-Content -Raw -Path X:\policy.json) -PolicyName vmimport -RoleName vmimport

Begin the download

We’re now finally ready to do the virtual disk image transfer. For this step, we first need to figure out where the VHD that’s attached to our Azure VM is. To do that, we can use the Get-AzureRmVm command. The URI for the VHD is buried a few properties deep but we can easily pull it out with PowerShell. Note that this property is only populated when the OS disk is an unmanaged VHD in a storage account, which is the setup this article assumes. Be sure to change the VM and resource group names!

$vm = Get-AzureRmVM -Name PSWA -ResourceGroupName ADBTESTING

$vhdUri = $vm.StorageProfile.OsDisk.Vhd.Uri

Once we know the VHD URI, we can then use the Save-AzureRmVhd command to download it to our mounted Azure File Share drive.
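Before starting the download, it may be worth confirming that a VHD URI actually came back. Here is a minimal sketch, assuming a hypothetical helper named Get-OsDiskVhdUri (not part of the AzureRM module). It reads the same property path used above, and the sample object at the bottom only stands in for a real VM object.

```powershell
# Hypothetical helper: returns the OS disk VHD URI or fails with a clear message.
function Get-OsDiskVhdUri {
    param(
        [Parameter(Mandatory)] $Vm
    )

    # Same property path as above; it comes back empty when no VHD URI is set
    $uri = $Vm.StorageProfile.OsDisk.Vhd.Uri

    if ([string]::IsNullOrEmpty($uri)) {
        throw "No VHD URI found for VM '$($Vm.Name)'. The OS disk may be a managed disk, which this walkthrough does not cover."
    }

    return $uri
}

# Stand-in object shaped like the properties this article relies on (illustrative values only)
$sampleVm = [pscustomobject]@{
    Name           = 'PSWA'
    StorageProfile = [pscustomobject]@{
        OsDisk = [pscustomobject]@{
            Vhd = [pscustomobject]@{ Uri = 'https://example.blob.core.windows.net/vhds/PSWA.vhd' }
        }
    }
}
Get-OsDiskVhdUri -Vm $sampleVm
```

With a real VM object, this would be called as $vhdUri = Get-OsDiskVhdUri -Vm $vm.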

$localVhdPath = "$($shareDrive.Name):\$($vm.Name).vhd"

Save-AzureRmVhd -ResourceGroupName $vm.ResourceGroupName -SourceUri $vhdUri -LocalFilePath $localVhdPath -NumberOfThreads 32

Downloading

1.7% complete; Remaining Time: 00:23:44; Throughput: 112.4Mbps

At this point, you’re going to wait awhile. Depending on the size of the VHD this can take up to 30 minutes or so.
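As a rough sanity check on the wait, transfer time can be estimated from the amount of data and the reported throughput. The sketch below uses a hypothetical helper, Get-TransferEstimate. The 20 GB figure is an assumed example, while 112.4 Mbps is the throughput from the sample output above.

```powershell
# Hypothetical helper: estimates transfer duration from size and throughput.
function Get-TransferEstimate {
    param(
        [Parameter(Mandatory)] [double] $SizeGB,
        [Parameter(Mandatory)] [double] $ThroughputMbps
    )

    # Decimal units: 1 GB = 8000 megabits
    $seconds = ($SizeGB * 8000) / $ThroughputMbps

    return [TimeSpan]::FromSeconds($seconds)
}

# Assumed example: 20 GB of data at the 112.4 Mbps shown in the output above
$estimate = Get-TransferEstimate -SizeGB 20 -ThroughputMbps 112.4
'Estimated time: {0:N0} minutes' -f $estimate.TotalMinutes
```

Actual times will vary with throughput over the course of the transfer, so treat the result as a ballpark figure only.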

Import into AWS

Once the VHD has been saved “locally”, we now need to upload it to our S3 bucket. This is the step that’s going to take a while. Even with a direct transfer from Azure to AWS, my 127 GB VHD took about 45 minutes to transfer over successfully. Luckily, we can use the Write-S3Object command, which automatically performs the upload as a multipart upload, meaning the transfer will be faster and more resilient. Again, be sure to change the S3 bucket name.

Write-S3Object -BucketName adambbucket -File $localVhdPath -Key pswa.vhd

Uploading

File X:\PSWA.vhd...0%

We’re finally able to create an AWS image. To do so, we have to convert the VHD into an AMI, and the PowerShell command for that is Import-EC2Image. Below is a quick snippet showing how to do that but if you’d like more information about this command, AWS has a great writeup on it.

$container = New-Object Amazon.EC2.Model.ImageDiskContainer

$container.Format = 'VHD'

$container.UserBucket = New-Object Amazon.EC2.Model.UserBucket

$container.UserBucket.S3Bucket = 'adambbucket'

$container.UserBucket.S3Key = 'pswa.vhd'

$params = @{ ClientToken = 'idempotencyToken'; Platform = 'Windows'; LicenseType = 'AWS'; DiskContainer = $container }

$task = Import-EC2Image @params

This will kick off the import task, which may take a while. To keep tabs on the process, we can use the Get-EC2ImportImageTask command to periodically check the status.

PS> Get-EC2ImportImageTask -ImportTaskId $task.ImportTaskId

Architecture    :
Description     :
Hypervisor      :
ImageId         :
ImportTaskId    : import-ami-fhcfkadi
LicenseType     : AWS
Platform        : Windows
Progress        : 2
SnapshotDetails : {}
Status          : active
StatusMessage   : pending

If you want to get fancy in your script, you can create a PowerShell while loop to continually check the status and report back when it’s done!

# Assumes the task's Status property reads 'completed' once the import has finished
while ((Get-EC2ImportImageTask -ImportTaskId $task.ImportTaskId).Status -ne 'completed') {
    Write-Host 'Waiting for image to import...'
    Start-Sleep -Seconds 10
}

Write-Host 'Image import complete!'

This process will take a while so go grab lunch. Once done though, the entire migration process is over! You now have an AMI in AWS, built from a VM that was just running in Azure and ready to launch as an EC2 instance.
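A simple loop like the one above will wait forever if the import fails. A slightly more defensive variant is sketched below, under stated assumptions: Wait-ImportTask is a hypothetical helper, and it assumes the task reports a Status of 'completed' on success and 'deleting' or 'deleted' when the import fails or is cancelled. The status lookup is passed in as a script block so the waiting logic can be tried without touching AWS.

```powershell
# Hypothetical helper: polls a task until it completes, fails, or times out.
function Wait-ImportTask {
    param(
        [Parameter(Mandatory)] [scriptblock] $GetTask,  # returns the current task object
        [int] $PollSeconds = 30,
        [int] $TimeoutMinutes = 180
    )

    $deadline = (Get-Date).AddMinutes($TimeoutMinutes)

    while ((Get-Date) -lt $deadline) {
        $current = & $GetTask

        if ($current.Status -eq 'completed') {
            return $current
        }

        # Assumption: these statuses mean the import failed or was cancelled
        if ($current.Status -in 'deleting', 'deleted') {
            throw "Import did not complete: $($current.StatusMessage)"
        }

        Write-Host "Status: $($current.Status), progress: $($current.Progress), message: $($current.StatusMessage)"
        Start-Sleep -Seconds $PollSeconds
    }

    throw "Import did not finish within $TimeoutMinutes minutes."
}
```

In this article’s flow it could be called as Wait-ImportTask -GetTask { Get-EC2ImportImageTask -ImportTaskId $task.ImportTaskId }, with the returned object’s ImageId property then holding the new AMI ID.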

Cleanup

Since I’d rather not get charged for resources I won’t be using after this migration, it’s important to clean up. During the migration process, we created an Azure File Share to temporarily store the VHD before it was transferred to AWS. We don’t need that anymore so I’ll now clean that up with PowerShell.

PS> Remove-AzureStorageShare -Name migrationstorage -Context $storageContext

Summary

Migrating VMs can be a daunting task. In this article, we covered only the basics. We were able to get a VM moved from Azure to AWS but we assumed a lot. There are lots of different bits and pieces you’ll have to look at before attempting to move production VMs like this. If you’re going to be migrating lots of Azure VMs, I’d suggest taking a look at the AWS Server Migration Service. It was built to support large VM migration scenarios and will be a better option than the ad-hoc approach we’ve taken here.

Declan is a Channel & Alliance rep for N2WS. When he's not helping customers optimize their cloud environments and writing easy-to-understand technical content, you can find him spending time on the golf course, improving his game.

Securing your AWS VMs with N2WS Backup & Recovery

N2WS Backup & Recovery, a cloud-native backup solution, is built specifically for AWS and Azure and provides the missing pieces once your VMs are migrated. N2WS can scan your AWS resources (EC2, RDS, DynamoDB, Aurora, Redshift) automatically and schedule them for backup. N2WS also provides instant recovery, DR across both regions and accounts, alerts, reports, and many other features. N2WS already helps hundreds of enterprises and service providers protect valuable data and mission-critical applications in the AWS cloud.