AWS Fargate is being hailed as a game-changer in the world of containerized microservices. In much the same way that the cloud freed developers from managing physical servers, the Fargate service abstracts and automates the launching and orchestration of containers managed by Amazon ECS or, for Kubernetes, Amazon EKS. Developers can take full advantage of the latest cloud-native app architectures without provisioning, scaling, or maintaining the servers the containers run on. This post describes the Fargate service and how it fits into the container ecosystem.

Containers and Their Benefits

According to TechTarget, “[c]ontainers are packages that rely on virtual isolation to deploy and run applications that access a shared operating system (OS) kernel without the need for virtual machines (VMs).” A container image statically describes everything needed to run an application except the kernel itself: code, runtime, system tools, system libraries, and settings. At runtime, a container engine executes the image consistently on any host that runs a compatible engine, whether that is a laptop, an on-premises server, or a cloud instance.

Containers address several major app development and deployment challenges:

- Portability: Because a container image encapsulates all app files and software dependencies, it becomes a building block that can be deployed reliably on any compute resource, from a laptop to production servers to any private or public cloud infrastructure. Applications behave consistently as they move through development, test, and production.

- Resource efficiency: Each VM runs its own complete guest OS, including its own kernel. By contrast, multiple containers on a single instance share the host's OS kernel, and each container can be allotted a specific amount of memory, disk space, and CPU. As a result, a server can typically host many more containers than VMs. In addition, because containers start quickly, apps can be scaled up and down rapidly, further optimizing resource use.

- Flexibility: With a microservices approach, a complex app can be split into multiple containers (e.g., database, front end, and server side). This modularity makes apps easier to manage: one or more modules can be changed without rebuilding the entire app. In addition, the modular approach, with on-demand instantiation, further improves resource efficiency (see above).
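To make the resource-efficiency point concrete, here is a minimal Python sketch of a modular app split into containers, each declaring its own CPU and memory, plus a check of whether they all fit on a single host. The `Container` type, the `fits_on_host` helper, the container names, and all numbers are hypothetical; a real scheduler also accounts for ports, placement constraints, and system overhead.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Container:
    """A hypothetical container with explicit resource requests."""
    name: str
    cpu_units: int   # ECS convention: 1024 CPU units = 1 vCPU
    memory_mib: int

def fits_on_host(containers, host_cpu_units, host_memory_mib):
    """Return True if the summed CPU and memory requests fit on the host."""
    total_cpu = sum(c.cpu_units for c in containers)
    total_mem = sum(c.memory_mib for c in containers)
    return total_cpu <= host_cpu_units and total_mem <= host_memory_mib

# A modular app split into separate containers (illustrative values only).
app = [
    Container("frontend", cpu_units=256, memory_mib=512),
    Container("api", cpu_units=512, memory_mib=1024),
    Container("database", cpu_units=1024, memory_mib=2048),
]

# A hypothetical host with 2 vCPUs and 4 GiB of memory.
print(fits_on_host(app, host_cpu_units=2048, host_memory_mib=4096))  # True
```

The three containers request 1792 CPU units and 3584 MiB in total, so they share one 2-vCPU, 4-GiB host, where three separate VMs would each also need memory for a guest OS.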

Introducing Fargate

Properly orchestrating container clusters is critical to app robustness, and several popular container orchestrators have emerged, such as Kubernetes, Docker Swarm mode, and Amazon Elastic Container Service (ECS). However, managing these orchestrators is not trivial. It requires specialized DevOps knowledge as well as careful monitoring of resource usage, since the orchestrators do not automatically provision or scale the VMs on which the containers run. To ensure app performance and availability, teams often overprovision capacity and thus pay for resources that aren’t needed all the time.

To make containers truly cloud-native, AWS introduced Fargate, which lets you run Docker containers via Amazon ECS without having to manage the underlying EC2 servers or clusters. AWS also announced that Fargate would be made available for Amazon Elastic Container Service for Kubernetes (EKS), AWS’ Kubernetes-as-a-service. This post explains how Fargate lets you deploy containers without provisioning any other AWS servers or management resources, all from the AWS Management Console.

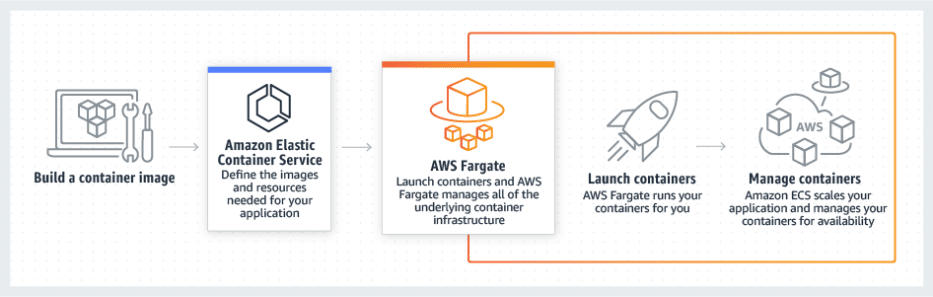

As shown in Figure 1, to use Fargate you first package the app as a container by building a container image, and then define the app as you would any Amazon ECS task, specifying its CPU and memory requirements as well as its networking and access (IAM) settings. You then choose one of two ECS launch types: Fargate or EC2. If you choose Fargate, the service launches the containers and manages the infrastructure they run on for you. You are charged only for the vCPU and memory resources your tasks use, not for EC2 instances.
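The task-definition step can be sketched as a request payload for the ECS `RegisterTaskDefinition` API, written here as a plain Python dict in the shape boto3's `register_task_definition` accepts. The family name, container name, image, and role ARN are placeholders. The size table reflects the original Fargate CPU/memory combinations (0.25 to 4 vCPU); AWS has since added larger sizes, so treat the table as an assumption and check current documentation.

```python
# Fargate task sizes: CPU units -> allowed memory (MiB), 0.25 to 4 vCPU only.
# Assumption: mirrors AWS's published table; newer, larger sizes are omitted.
FARGATE_SIZES = {
    256: {512, 1024, 2048},
    512: set(range(1024, 4097, 1024)),
    1024: set(range(2048, 8193, 1024)),
    2048: set(range(4096, 16385, 1024)),
    4096: set(range(8192, 30721, 1024)),
}

def fargate_task_definition(family, image, cpu, memory, execution_role_arn):
    """Build a RegisterTaskDefinition payload for the Fargate launch type."""
    if memory not in FARGATE_SIZES.get(cpu, set()):
        raise ValueError(f"unsupported Fargate size: cpu={cpu}, memory={memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",      # the only network mode Fargate supports
        "cpu": str(cpu),              # task-level sizes are passed as strings
        "memory": str(memory),
        # Role ECS uses on your behalf, e.g. to pull private images and send logs.
        "executionRoleArn": execution_role_arn,
        "containerDefinitions": [
            {
                "name": "web",        # hypothetical container name
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
            }
        ],
    }

# Placeholder values throughout.
task_def = fargate_task_definition(
    family="demo-web",
    image="nginx:latest",
    cpu=256,
    memory=512,
    execution_role_arn="arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
)
```

With boto3 installed and credentials configured, the dict could then be passed as `boto3.client("ecs").register_task_definition(**task_def)`. Validating the size locally simply fails earlier than the API would.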

Note: If you want or need more granular control over the container runtime infrastructure, you can choose the EC2 launch type instead and run the containers on your own self-managed EC2 instances, including Reserved Instances and even Spot Instances.
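The launch-type choice shows up when you start a task. Below is a minimal sketch of keyword arguments for the ECS `RunTask` API, in the shape boto3's `run_task` accepts; the helper name and all identifiers are placeholders. A Fargate task needs an `awsvpcConfiguration` because each task gets its own network interface, while an EC2 task needs one only if its task definition uses `awsvpc` mode. As a simplifying assumption, the sketch sets `assignPublicIp` only for Fargate.

```python
def run_task_params(cluster, task_definition, launch_type,
                    subnets=None, security_groups=None, public_ip=False):
    """Build keyword arguments for ECS RunTask (e.g. boto3's ecs.run_task)."""
    if launch_type not in ("FARGATE", "EC2"):
        raise ValueError("launch_type must be 'FARGATE' or 'EC2'")
    if launch_type == "FARGATE" and not subnets:
        # Every Fargate task gets its own network interface in a subnet.
        raise ValueError("Fargate tasks require at least one subnet")
    params = {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "launchType": launch_type,
        "count": 1,
    }
    if subnets:
        # For EC2, pass subnets only if the task definition uses awsvpc mode.
        awsvpc = {"subnets": list(subnets),
                  "securityGroups": list(security_groups or [])}
        if launch_type == "FARGATE":
            awsvpc["assignPublicIp"] = "ENABLED" if public_ip else "DISABLED"
        params["networkConfiguration"] = {"awsvpcConfiguration": awsvpc}
    return params

# Placeholder cluster, task family, subnet, and security group identifiers.
fargate = run_task_params("demo-cluster", "demo-web", "FARGATE",
                          subnets=["subnet-0123456789abcdef0"],
                          security_groups=["sg-0123456789abcdef0"])
ec2 = run_task_params("demo-cluster", "demo-web", "EC2")
```

The only difference you request is `launchType`. With EC2 you must also have registered container instances in the cluster, which is the infrastructure Fargate otherwise manages for you.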

Fargate is integrated with other AWS services such as Application Load Balancer (ALB). If you configure an ECS service to use an ALB, each task that Fargate launches for that service is registered with the load balancer, and traffic is automatically distributed across the registered tasks. Similarly, you can use AWS Identity and Access Management (IAM) to associate an IAM role with an ECS task launched by Fargate, giving its containers that role's permissions.
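The ALB and IAM integration can be sketched as a `CreateService` request (as accepted by boto3's `create_service`) plus a task role added to the task definition. The helper names, ARNs, and IDs are placeholders. One detail worth knowing: Fargate tasks use `awsvpc` mode and register with the load balancer by IP address, so the ALB target group must use the `ip` target type rather than `instance`.

```python
def fargate_service_params(cluster, service_name, task_definition,
                           target_group_arn, container_name, container_port,
                           subnets, security_groups, desired_count=2):
    """Build CreateService kwargs for a Fargate service behind an ALB."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        "desiredCount": desired_count,  # the ALB spreads traffic across these tasks
        "launchType": "FARGATE",
        "loadBalancers": [{
            # Tasks register by IP, so this target group must use target type "ip".
            "targetGroupArn": target_group_arn,
            "containerName": container_name,
            "containerPort": container_port,
        }],
        "networkConfiguration": {"awsvpcConfiguration": {
            "subnets": list(subnets),
            "securityGroups": list(security_groups),
            "assignPublicIp": "DISABLED",  # clients reach the tasks via the ALB
        }},
    }

def with_task_role(task_definition, role_arn):
    """Return a copy of a task definition whose containers get `role_arn`."""
    return {**task_definition, "taskRoleArn": role_arn}

# All names and ARNs below are placeholders.
svc = fargate_service_params(
    "demo-cluster", "demo-web-svc", "demo-web",
    "arn:aws:elasticloadbalancing:us-east-1:123456789012:targetgroup/demo-tg/0123456789abcdef",
    "web", 80, ["subnet-0123456789abcdef0"], ["sg-0123456789abcdef0"])
td = with_task_role({"family": "demo-web"},
                    "arn:aws:iam::123456789012:role/demo-app-role")
```

The role lives on the task definition rather than the service, so every task started from that definition, whether by a service or a one-off `RunTask`, gets the same permissions.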

Fargate pricing is based on the vCPU and memory requested by a task, billed per second with a 60-second minimum. Any additional resources you launch, such as load balancers, are charged separately.
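The billing rule can be written as a short calculation: the billed duration is the runtime rounded up to whole seconds, with a 60-second floor, multiplied by the task's combined vCPU and memory rate. The function name and the per-hour rates below are made-up placeholders, not AWS prices; look up current Fargate pricing for your region.

```python
import math

def fargate_task_cost(vcpu, memory_gb, runtime_seconds,
                      vcpu_per_hour, gb_per_hour, minimum_seconds=60):
    """Estimate the compute charge for one Fargate task.

    Billing is per second (partial seconds rounded up) with a 60-second
    minimum. Rates are inputs: pass the current prices for your region.
    """
    billed_seconds = max(math.ceil(runtime_seconds), minimum_seconds)
    hourly_rate = vcpu * vcpu_per_hour + memory_gb * gb_per_hour
    return hourly_rate * billed_seconds / 3600

# Hypothetical rates, for illustration only.
VCPU_RATE, GB_RATE = 0.04, 0.004

short_task = fargate_task_cost(0.25, 0.5, 20, VCPU_RATE, GB_RATE)    # billed as 60 s
one_hour = fargate_task_cost(0.25, 0.5, 3600, VCPU_RATE, GB_RATE)    # billed as 3600 s
```

With these placeholder rates, a 0.25-vCPU, 0.5-GB task costs 0.012 per hour, and a 20-second run is billed as a full minute (0.0002). Load balancers and other resources would be added on top.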

AWS Fargate and Task Networking

Although it abstracts the management of underlying instances, Fargate offers significant control over network configuration and policies. Fargate tasks run in Amazon VPC (Virtual Private Cloud), the networking layer for Amazon EC2. VPCs are logically isolated virtual networks into which you launch your AWS resources. You can define public subnets for resources that must be connected to the internet, and private subnets for resources that do not require internet access. VPCs support multiple layers of security, including security groups (attached to network interfaces) and network access control lists (ACLs, applied at the subnet level). (The VPC Capture & Clone feature of N2WS allows AWS users to bootstrap other regions with an identical infrastructure setup. It is quick and easy to use and keeps your recovery environment ready for an identical restore.)
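The public/private distinction comes down to routing: a subnet is generally called public when its route table sends internet-bound IPv4 traffic (`0.0.0.0/0`) to an internet gateway. A private subnet may instead route that traffic through a NAT gateway, which allows outbound connections but not inbound ones. The sketch below applies that rule to route tables described as plain data; the function name and all IDs are placeholders, and in practice you would read route tables from the EC2 API.

```python
def is_public_subnet(route_table):
    """Return True if the route table has a default IPv4 route to an internet gateway.

    `route_table` is a list of (destination_cidr, target_id) pairs.
    """
    return any(dest == "0.0.0.0/0" and target.startswith("igw-")
               for dest, target in route_table)

# Placeholder subnets and gateways in a 10.0.0.0/16 VPC.
route_tables = {
    "subnet-public-a": [("10.0.0.0/16", "local"), ("0.0.0.0/0", "igw-0abc")],
    "subnet-private-a": [("10.0.0.0/16", "local"), ("0.0.0.0/0", "nat-0def")],
}

public = sorted(s for s, rt in route_tables.items() if is_public_subnet(rt))
private = sorted(s for s, rt in route_tables.items() if not is_public_subnet(rt))
```

A common pattern is to place internet-facing load balancers in the public subnets and the tasks themselves in the private ones.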

When running tasks on Fargate, there are two kinds of networking to consider:

- Local (container) networking: When a task runs multiple containers, they share the task's elastic network interface (ENI) and can communicate with each other directly over localhost. This relies on the awsvpc network mode, which is the only network mode Fargate supports.

- External networking: When containers communicate with servers outside the task, traffic goes through the task's ENI into the VPC. A task’s external connectivity is therefore configured through the VPC in which the task is launched: its subnets, route tables, and the security groups attached to the task.

External networking has higher latency and greater security exposure than local networking. However, most containerized apps use both: local networking between tightly coupled containers within a task, and external networking between the tiers of a multi-tier architecture.
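Containers in the same awsvpc task share one network namespace, so a sidecar listening on a port is reachable from its neighbor at localhost. The Python sketch below simulates that with two threads in one process standing in for two containers; the function names, the message, and the ephemeral port are illustrative assumptions. In a real task, each side would be a separate container in the same task definition.

```python
import socket
import threading

def recv_all(sock):
    """Read from a socket until the peer closes or shuts down its write side."""
    chunks = []
    while True:
        chunk = sock.recv(1024)
        if not chunk:
            return b"".join(chunks)
        chunks.append(chunk)

def run_sidecar(server_sock):
    """Stand-in for a sidecar container: answer one request, then close."""
    conn, _ = server_sock.accept()
    with conn:
        request = recv_all(conn)
        conn.sendall(b"sidecar got: " + request)

def call_sidecar(port, message):
    """Stand-in for the app container: reach the sidecar over localhost."""
    with socket.create_connection(("127.0.0.1", port)) as sock:
        sock.sendall(message)
        sock.shutdown(socket.SHUT_WR)  # signal the end of the request
        return recv_all(sock)

# Bind to an ephemeral port on the loopback interface shared by the "task".
server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
server.bind(("127.0.0.1", 0))
server.listen(1)
port = server.getsockname()[1]

worker = threading.Thread(target=run_sidecar, args=(server,))
worker.start()
reply = call_sidecar(port, b"ping")
worker.join()
server.close()
print(reply)  # b'sidecar got: ping'
```

Traffic to anything outside the task would instead leave through the task's ENI and be subject to its security groups and subnet routing.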

AWS Fargate vs. Amazon ECS and Amazon EKS

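So if you’re running containers on AWS, when should you use Fargate and when Amazon ECS or Amazon EKS? Strictly speaking, this is not an either-or choice: Fargate is a launch type for ECS tasks (with EKS support announced), not a separate orchestrator. The practical question is whether to run your tasks on Fargate, letting AWS manage the servers, or on the EC2 launch type, which gives you more granular control over the runtime infrastructure, including Reserved and Spot Instances.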

Final Note

All the major public cloud providers offer container orchestration services. Since late 2017, Microsoft Azure has offered fully managed container services: Azure Container Service and Azure Kubernetes Service. Google says that everything at Google, from Gmail to YouTube to Search, runs in a container, and GCP offers Kubernetes Engine as a managed, production-ready environment for deploying containerized applications.

AWS Fargate, however, is arguably leading the way toward the complete abstraction of containerized apps from their underlying infrastructure. It cuts through the “jungle” of EC2 container-instance clusters, where each instance hosts multiple different tasks and each task may run several containers, and instead offers a far simpler view in which each task behaves like its own machine. So get out there and have some fun with Fargate.